16-899A:

The Visual World as seen by the Neurons and Machines

|

Instructors: |

Logistics: |

Important Dates: |

|

Abhinav Gupta (abhinavg@cs), EDSH 213

Elissa Aminoff (elissa@cnbc) |

Monday, Wednesday (12:00-1:20pm) – NSH 3002 |

January 13 – Classes Begin |

Course Description:

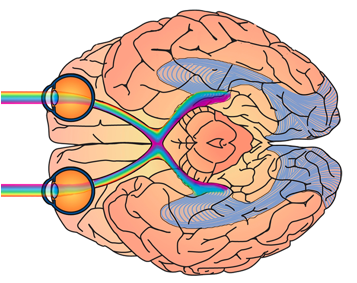

Why do things look the way they do? Why is understanding the visual world, while so effortless for humans, so excruciatingly difficult for computers? What insights from the human visual system can we use in computer vision? Can we verify machine representation using human subjects? How can computer vision help understand human vision? What are the advantages of combining computer vision with our understanding of the neural mechanisms underlying human vision?

In this course, through lectures, paper presentations, and projects, we will explore what we understand about human vision and how that can help in designing computational perception algorithms. We will also explore how assumptions underlying machine perception frameworks can be verified using fMRI studies of the human brain, and whether machine perception frameworks can provide a model to understand the cortical representation of the visual world.

Blog Link:

http://16-899a-2014.blogspot.com

Project Data

Schedule:

|

Date |

Topic |

Presenter + Slide Links |

Reading |

|

Jan 13 |

Introduction to Class – A Computer Vision Perspective |

Abhinav |

- |

|

Jan 15 |

Introduction – A Neuroscience Perspective, Philosophies in Neuroscience |

Elissa [Slides] |

- |

|

Jan 20 |

MLK Day – No Class |

- |

- |

|

Jan 22 |

Physiology – From Retina to V1 to High-Level Areas |

Elissa [Slides] |

- |

|

Jan 27,29 |

Philosophies – Theories behind human vision |

Abhinav [Slides] |

- |

|

Feb 03 |

No Class – Attend Dickinson Prize Lecture |

|

- |

|

Feb 05 |

Neuroscience Overview – Methods and Approaches |

Elissa [Slides] |

- |

|

Feb 10 |

Neuroscience Overview – Continued, Case Study |

- |

|

|

Feb 12,17 |

Object Recognition in Computer Vision – Issues and Discussions |

Abhinav |

- |

|

Feb 19 |

Object Recognition: (1) Semantic Categories |

|

· [Gallant] Huth et al., A Continuous Semantic Space Describes the Representation of Thousands of Object and Action Categories across the Human Brain, Neuron 2012 [PDF]

· Haxby et al., Distributed and Overlapping Representations of Faces and Objects in Ventral Temporal Cortex, Science 2001. [PDF]

· ImageNet: A Large Scale Hierarchical Image Database, [PDF] |

|

Feb 26 |

Object Recognition – Categories to Attributes |

|

· [Mitchell] Palatucci et al., Zero-Shot Learning with Semantic Output Codes, NIPS 2009 [PDF]

· Kriegeskorte et al., Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron 2008 [PDF]

· [Attributes] Farhadi et al., Describing Objects by their Attributes, CVPR 2009 [PDF] |

|

March 03 |

Object Recognition – Functional Categories |

|

· [Mahon] Action-Related Properties Shape Object Representations in the Ventral Stream, Neuron 2007. [PDF]

· Mahon, A connectivity constrained account of the representation and organization of object concepts, Concepts: New Direction 2013 [PDF]

· Gupta et al., From 3D Scene Geometry to Human Workspace, CVPR 2011. [PDF] |

|

March 05 |

Object Recognition – Semantic vs. Visual Categories |

|

· [DiCarlo] Shape Similarity, Better than Semantic Membership, Accounts for the Structure of Visual Object Representations in a Population of Monkey Inferotemporal Neurons, Computational Biology 2013. [PDF]

· Divvala et al., How important are deformable parts in the deformable parts model?, ECCV Parts and Attributes Workshop 2012. [PDF] |

|

March 17

|

Projects

|

- |

|

|

March 19

|

Categories vs. Exemplars |

· [Koutstaal] Perceptual specificity in visual object priming: functional magnetic resonance imaging evidence for a laterality difference in fusiform cortex, Neuropsychologia 2001. [PDF]

· Torralbo, Walther, Chai, Caddigan, Fei-Fei, Beck, 2013, Good exemplars of natural scene categories elicit clearer patterns than bad exemplars but not greater BOLD activity [PDF]

· Malisiewicz et al., Ensemble of Exemplar-SVMs for Object Detection and Beyond, ICCV 2011 [PDF] |

|

|

March 24

|

Invariances in Recognition – Mid Level Features |

|

· [DiCarlo] Rust et al., Selectivity and Tolerance (“Invariance”) Both Increase as Visual Information Propagates from Cortical Area V4 to IT. Neuroscience 2010. [PDF]

· Deep network for Object Detection [PDF]

· Riesenhuber et al., HMAX Model. [PDF] |

|

March 26

|

Scenes in Computer Vision and Neuroscience

|

Abhinav + Elissa [Slides] |

No Readings |

March 31

|

Scenes – Structure + Content

|

|

· Kravitz, Real-World Scene Representations in High-Level Visual Cortex: It’s the Spaces More Than the Places, 2011 [PDF]

· Park and Oliva, Disentangling scene content from its spatial boundary: Complementary roles for the PPA and LOC in representing real-world scenes, [PDF]

· Genevieve Patterson, James Hays. SUN Attribute Database: Discovering, Annotating, and Recognizing Scene Attributes. Proceedings of CVPR 2012. [PDF]

|

Apr 02

|

Scenes – Object Based Representations

|

|

· Harel et al., 2013, Deconstructing Visual Scenes in Cortex: Gradients of Object and Spatial Layout Information. Cerebral Cortex. [PDF]

· Stansbury 2013. Natural Scene Statistics Account for the Representation of Scene Categories in Human Visual Cortex [PDF]

· Li and Fei-Fei, Object Bank: A High-Level Image Representation for Scene Classification & Semantic Feature Sparsification, [PDF]

|

|

April 07 |

Mid-term Class Presentations |

|

|

|

April 09 |

Scenes – Objects [2] |

|

· Janzen, Selective Neural Representation of Objects Relevant for Navigation, 2004 [PDF]

· Bar 2008, Scenes Unseen: The Parahippocampal Cortex Intrinsically Subserves Contextual Associations, Not Scenes or Places Per Se [PDF] |

|

April 14

|

Information Processing (IP): Top-Down vs. Bottom-Up |

|

· Bar 2006, Top-down facilitation of visual recognition [PDF]

· Bar 2007, The proactive brain: using analogies and associations to generate predictions [PDF]

· Malisiewicz, Beyond Categories: The Visual Memex Model for Reasoning About Object Relationships, NIPS 2009 [PDF] |

|

April 16 |

Guest Lecture: Biological Motion |

John |

|

April 21

|

IP – Attention

|

|

· Reynolds 2003, Interacting Roles of Attention and Visual Salience in V4. [PDF]

· Maunsell 2006, Feature-based attention in visual cortex. [PDF]

· Judd et al., Learning to predict where people look, [PDF]

|

|

April 23

|

Role of Context |

|

· Kveraga 2011, Early onset of neural synchronization in the contextual associations network. [PDF]

· Aminoff, The Cortical Underpinnings of Context-based Memory Distortion, 2008 [PDF]

· Tu, Auto-context and its application to High-Level Vision Tasks, [PDF] |

|

April 28

|

How far does top-down travel? V1? |

|

· Murray et al., The representation of perceived angular size in human primary visual cortex. Nature Neuroscience 2006 [PDF]

· Borenstein et al., Combining Top-Down and Bottom-Up Segmentation, [PDF] |

|

April 30 |

Last Lecture |

Abhinav Gupta |

|

|

|

Project Presentations |

|

|

Extra Readings:

First two weeks of Feb:

· Ungerleider, Andrew H. Bell, Uncovering the visual “alphabet”: Advances in our understanding of object perception [PDF]

· Martin, Circuits in Mind: The Neural Foundations for Object Concepts, 2009 [PDF]