(Graphics Interface, May 2004)

J. Barbic, A. Safonova, J.Y. Pan, C. Faloutsos, J. K. Hodgins, and N. S. Pollard

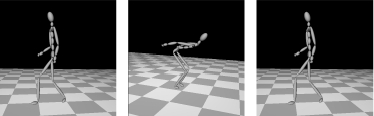

walking, forward jumping, walking,

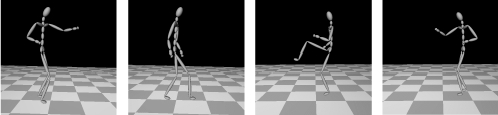

arm punching, walking, leg kicking, and arm punching

Abstract:

Much of the motion capture data used in animations, commercials, and video games is carefully segmented into distinct motions either at the time of capture or by hand after the capture session. As we move toward collecting more and longer motion sequences, however, automatic segmentation techniques will become important for processing the results in a reasonable time frame. We have found that straightforward, easy to implement segmentation techniques can be very effective for segmenting motion sequences into distinct behaviors. In this paper, we present three approaches for automatic segmentation. The first two approaches are online, meaning that the algorithm traverses the motion from beginning to end, creating the segmentation as it proceeds. The first assigns a cut when the intrinsic dimensionality of a local model of the motion suddenly increases. The second places a cut when the distribution of poses is observed to change. The third approach is a batch process and segments the sequence where consecutive frames belong to different elements of a Gaussian mixture model. We assess these three methods on fourteen motion sequences and compare the performance of the automatic methods to that of transitions selected manually.

Paper (pdf; 200K)

Movies showing segmentation of two motion sequences:

Movie1: Quicktime (mp4; 5 MB), DivX (avi; 2.5 MB), Intel Indeo Video 5 (avi; 13 MB)

Movie2: Quicktime (mp4; 10 MB), DivX (avi; 5 MB), Intel Indeo Video 5 (avi; 21 MB)

Graphics Interface 2004 Presentation Slides: Powerpoint presentation + movies (ppt; 46 MB)

There are 62 DOFs in the AMC files in the CMU motion capture database. There are 29 joints in total (with root position and orientation counted as one joint). The root joint is 6-dimensional, and the rest are 1-, 2-, or 3-dimensional. Here is a picture of the joints.

This file contains the joint data (angles) for all 14 motion capture sequences used in our paper. Each row of the provided text files stores one frame (62 DOFs), and the data is sampled at 120 frames per second. See the amc_to_matrix.m script (on the CMU motion capture database website, under "Tools") for the meaning of these DOFs.
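As a rough illustration of the layout described above, the following Python sketch loads one sequence into a frames-by-62 matrix and converts a frame count to seconds. It assumes the text files are whitespace-delimited, which this page does not state; the names `load_motion` and `frame_to_seconds` are hypothetical, and the demo uses synthetic zeros rather than a real sequence file.

```python
import io
import numpy as np

FPS = 120       # frame rate stated on this page
NUM_DOFS = 62   # DOFs per frame stated on this page

def load_motion(source):
    """Load a text file with one frame per row (assumed whitespace-delimited)."""
    frames = np.loadtxt(source, ndmin=2)
    if frames.shape[1] != NUM_DOFS:
        raise ValueError(f"expected {NUM_DOFS} columns, got {frames.shape[1]}")
    return frames

def frame_to_seconds(frame_index, fps=FPS):
    """Convert a frame index (or frame count) to time in seconds."""
    return frame_index / fps

# Synthetic stand-in for a real sequence file: 240 frames of zeros.
demo = io.StringIO("\n".join(" ".join(["0"] * NUM_DOFS) for _ in range(240)))
motion = load_motion(demo)
duration = frame_to_seconds(motion.shape[0])
```

With a real file, pass its path to `load_motion` instead of the in-memory buffer.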

This file contains our ground truth estimates for where the optimal segment boundaries should be. See "key.txt" for ground truth file syntax.

We manually removed some of the joints close to the end effectors because the data at these joints was of low quality due to an insufficient number of markers. We retained 14 joints and used them in our method. Each joint is represented as a quaternion, regardless of whether the joint is 1-, 2-, or 3-dimensional, so each input frame to our segmentation algorithm has 14 x 4 = 56 dimensions.
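The 14 x 4 = 56 layout can be sketched as follows. This is only an illustration, not the conversion code used for the paper: it assumes joint angles are given in degrees and composed as R = Rz Ry Rx, and that a joint with fewer than three DOFs leaves the remaining angles at zero. The actual rotation order and axis assignment come from the skeleton definition and may differ. The function names are hypothetical.

```python
import numpy as np

def euler_deg_to_quat(rx=0.0, ry=0.0, rz=0.0):
    """Unit quaternion (w, x, y, z) for R = Rz @ Ry @ Rx, angles in degrees.

    The rotation order is an assumption made for this sketch.
    """
    hx, hy, hz = np.radians([rx, ry, rz]) / 2.0
    cx, sx = np.cos(hx), np.sin(hx)
    cy, sy = np.cos(hy), np.sin(hy)
    cz, sz = np.cos(hz), np.sin(hz)
    return np.array([
        cz * cy * cx + sz * sy * sx,
        cz * cy * sx - sz * sy * cx,
        cz * sy * cx + sz * cy * sx,
        sz * cy * cx - cz * sy * sx,
    ])

def frame_to_quaternions(joint_angles):
    """Concatenate one quaternion per joint into a flat vector."""
    return np.concatenate([euler_deg_to_quat(*angles) for angles in joint_angles])

# 14 joints with made-up angles; a 1-DOF joint supplies a single angle
# and the other two default to zero.
pose = [(10.0,)] * 4 + [(10.0, 20.0, 30.0)] * 10
frame = frame_to_quaternions(pose)  # 14 joints x 4 components = 56 values
```

Using four numbers for every joint keeps the frame dimension fixed at 56 regardless of how many DOFs each joint has.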

The following 14 joints were retained:

'lowerback', 'upperback', 'thorax', 'lowerneck', 'upperneck', 'head', 'rhumerus', 'rradius', 'lhumerus', 'lradius', 'rfemur', 'rtibia', 'lfemur', 'ltibia'.

You can look into our amc_to_matrix.m script (on the CMU motion capture database website, under "Tools") to find out where in the 62-dimensional vector each of these joints is located.
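Once the column positions are known, extracting the retained joints could look like the Python sketch below. The helper `select_joints` and the `column_map` argument are hypothetical, and the column ranges in the toy example are made up purely for illustration; the real ranges have to be read from amc_to_matrix.m.

```python
import numpy as np

RETAINED_JOINTS = [
    'lowerback', 'upperback', 'thorax', 'lowerneck', 'upperneck', 'head',
    'rhumerus', 'rradius', 'lhumerus', 'lradius',
    'rfemur', 'rtibia', 'lfemur', 'ltibia',
]

def select_joints(frames, column_map, joints=RETAINED_JOINTS):
    """Return {joint: (num_frames, dofs) array} for the requested joints.

    column_map maps a joint name to the slice of columns holding its DOFs.
    """
    missing = [j for j in joints if j not in column_map]
    if missing:
        raise KeyError(f"no column range for: {missing}")
    return {j: frames[:, column_map[j]] for j in joints}

# Toy example only: a 3-frame, 8-column matrix and made-up column ranges,
# not the real 62-column layout.
toy_frames = np.arange(24, dtype=float).reshape(3, 8)
toy_map = {'rfemur': slice(0, 3), 'rtibia': slice(3, 4)}
picked = select_joints(toy_frames, toy_map, joints=['rfemur', 'rtibia'])
```

Passing the mapping as an argument keeps the sketch independent of any particular column layout.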

Last modified: March 24, 2008