Online Expression Control

Real-time animation and control of three-dimensional facial expressions using a single video camera

Project description

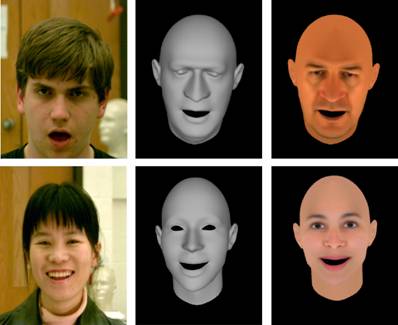

Controlling and animating the facial expression of a computer-generated 3D character is a difficult problem because the face has many degrees of freedom while most available input devices have few. In this paper, we show that a rich set of lifelike facial actions can be created from a preprocessed motion capture database and that a user can control these actions by acting out the desired motions in front of a video camera. We develop a real-time facial tracking system to extract a small set of animation control parameters from video. Because of the nature of video data, these parameters may be noisy, low-resolution, and contain errors. The system uses the knowledge embedded in motion capture data to translate these low-quality 2D animation control signals into high-quality 3D facial expressions. To adapt the synthesized motion to a new character model, we introduce an efficient expression retargeting technique whose run-time computation is constant independent of the complexity of the character model. We demonstrate the power of this approach through two users who control and animate a wide range of 3D facial expressions of different avatars.
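This page does not spell out how the noisy 2D control signals are mapped to 3D expressions, so the following is only a minimal sketch of the general idea of using a motion capture database as prior knowledge. It assumes an inverse-distance-weighted k-nearest-neighbor blend, which is a swapped-in illustration and not necessarily the method used in the paper. The function name `knn_blend`, the toy `database`, and the layout of its entries (2D control parameters paired with a 3D expression vector) are all hypothetical.

```python
import math

def knn_blend(query, database, k=3, eps=1e-8):
    """Blend the expressions of the k database entries whose control
    parameters lie closest to the (possibly noisy) query.

    Illustrative assumption only: each database entry is a pair
    (control_params, expression_vector).
    """
    # Rank entries by Euclidean distance in control-parameter space.
    ranked = sorted(database, key=lambda entry: math.dist(query, entry[0]))[:k]
    # Closer examples get larger weights; eps avoids division by zero.
    weights = [1.0 / (math.dist(query, ctrl) + eps) for ctrl, _ in ranked]
    total = sum(weights)
    dim = len(ranked[0][1])
    # Weighted average of the selected expression vectors.
    return [
        sum(w * expr[i] for w, (_, expr) in zip(weights, ranked)) / total
        for i in range(dim)
    ]

# Toy database (hypothetical values): control parameters -> expression vector.
database = [
    ((0.0, 0.0), (0.0, 0.0, 0.0)),  # neutral
    ((1.0, 0.0), (1.0, 0.0, 0.5)),  # mouth open
    ((0.0, 1.0), (0.0, 1.0, 0.5)),  # eyebrows raised
]

blended = knn_blend((0.9, 0.1), database, k=2)
```

Because the output is always a combination of captured examples, a noisy query still yields a plausible expression; the trade-off is that the result can never leave the span of the database.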

Publications

- Vision-based Control of 3D Facial Animation, ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA 2003)

Videos, slides and data

- Two users control and animate two avatars (15.6 MB AVI clip, no audio)

- PowerPoint slides (10.4 MB)

Project members

Related projects

- Performance Animation from Low-dimensional Control Signals, ACM Transactions on Graphics (SIGGRAPH 2005)

- Interactive Control of Avatars Animated with Human Motion Data, ACM Transactions on Graphics (SIGGRAPH 2002)

Acknowledgement

Supported in part by the NSF under Grants IIS-0205224 and IIS-0326322.