http://graphics.cs.cmu.edu/projects/controllerCapture/

Video-based 3D Motion Capture through Biped Control

Presented at SIGGRAPH, 2012

People

Abstract

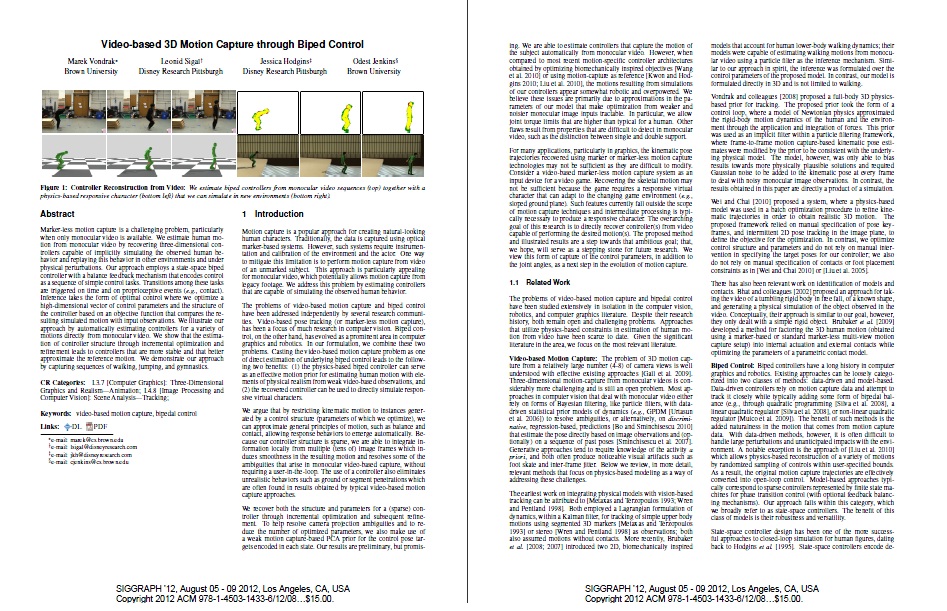

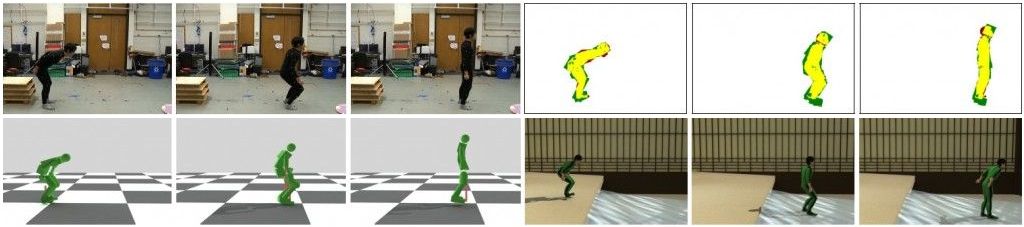

Marker-less motion capture is a challenging problem, particularly when monocular video is all that is available. We estimate biped control from monocular video by implicitly recovering physically plausible three-dimensional motion of a subject along with a character model (controller) capable of replaying this motion in other environments and under physical perturbations. Our approach consists of a state-space biped controller with a balance feedback mechanism that encodes control as a sequence of simple control tasks. Transitions among these tasks are triggered by timing and by proprioceptive events (e.g., contact). Inference takes the form of optimal control: we optimize (incrementally and/or in a batch) a high-dimensional vector of control parameters and the structure of the controller, based on an objective function that measures the consistency of the resulting simulated motion with the input observations. We illustrate our approach by automatically estimating controllers for a variety of motions directly from monocular video. To separate errors introduced by video tracking from errors introduced by the controllers, we also test on reference motion capture data. We show that estimating controller structure through incremental optimization and refinement leads to controllers that are more stable and that better approximate the reference motion. We demonstrate our approach by capturing sequences of walking, jumping, and gymnastics, and we evaluate the results through qualitative and quantitative comparisons to video and motion capture data.
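The abstract describes the controller as a sequence of simple control tasks whose transitions fire on time or on proprioceptive events such as contact, combined with a balance feedback term. The following is a minimal Python sketch of that kind of state machine under stated assumptions, not the authors' implementation: the names `ControlTask`, `SensorState`, `StateMachineController`, and `tracking_objective` are hypothetical, each task is reduced to a single swing-hip target, and the balance law is borrowed from SIMBICON (theta_d = theta_d0 + c_d * d + c_v * v, where d and v are the horizontal center-of-mass offset and velocity). The squared-error objective is likewise an assumed stand-in for the paper's consistency measure between simulated motion and observations.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ControlTask:
    """One simple control task (state) of a hypothetical biped state machine."""
    name: str
    target_swing_hip: float    # nominal swing-hip target angle (radians)
    duration: Optional[float]  # time-based transition in seconds; None disables it
    end_on_contact: bool       # proprioceptive transition on swing-foot contact
    c_d: float = 0.0           # balance gain on COM position offset (assumed SIMBICON-style)
    c_v: float = 0.0           # balance gain on COM velocity (assumed SIMBICON-style)


@dataclass
class SensorState:
    time_in_task: float   # seconds since the current task started
    swing_contact: bool   # has the swing foot touched the ground?
    com_offset: float     # horizontal COM position relative to the stance ankle (m)
    com_velocity: float   # horizontal COM velocity (m/s)


def should_transition(task: ControlTask, s: SensorState) -> bool:
    """Leave a task when its timer expires or, if enabled, on swing-foot contact."""
    timed_out = task.duration is not None and s.time_in_task >= task.duration
    contacted = task.end_on_contact and s.swing_contact
    return timed_out or contacted


def swing_hip_target(task: ControlTask, s: SensorState) -> float:
    """Balance feedback: shift the nominal target by COM offset and velocity."""
    return task.target_swing_hip + task.c_d * s.com_offset + task.c_v * s.com_velocity


class StateMachineController:
    """Cycles through control tasks, advancing when a transition condition fires."""

    def __init__(self, tasks: Sequence[ControlTask]):
        self.tasks = list(tasks)
        self.index = 0

    @property
    def current(self) -> ControlTask:
        return self.tasks[self.index]

    def step(self, s: SensorState) -> bool:
        """Advance (cyclically) to the next task if the current one ends.
        Returns True when a transition happened."""
        if should_transition(self.current, s):
            self.index = (self.index + 1) % len(self.tasks)
            return True
        return False


def tracking_objective(simulated: Sequence[float], observed: Sequence[float]) -> float:
    """Hypothetical consistency measure: sum of squared per-frame differences."""
    if len(simulated) != len(observed):
        raise ValueError("simulated and observed sequences must have equal length")
    return sum((a - b) ** 2 for a, b in zip(simulated, observed))


if __name__ == "__main__":
    # Two-task gait cycle: a timed task followed by a contact-terminated task.
    walk = StateMachineController([
        ControlTask("timed-swing", 0.5, duration=0.3, end_on_contact=False, c_d=0.5, c_v=0.2),
        ControlTask("swing-to-contact", 0.5, duration=None, end_on_contact=True, c_d=0.5, c_v=0.2),
    ])
    walk.step(SensorState(0.3, False, 0.0, 0.0))  # timer expires -> next task
    print(walk.current.name, swing_hip_target(walk.current, SensorState(0.0, False, 0.1, 0.5)))
```

In an optimization loop along the lines the abstract describes, the per-task targets, durations, and feedback gains would form the control parameter vector, and an optimizer would adjust them to reduce the objective computed over a simulated rollout; the physics simulation itself is omitted from this sketch.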

Paper

Video

Full size movie (QuickTime, 85 MB)

BibTeX

@article{vondrak-siggraph2012,
  author = {Vondrak, Marek and Sigal, Leonid and Hodgins, Jessica and Jenkins, Odest},
  title = {Video-based 3D Motion Capture through Biped Control},
  journal = {ACM Transactions on Graphics (TOG) (Proceedings of ACM SIGGRAPH)},
  year = {2012},
  volume = {?},
  number = {?},
  abstract = "Marker-less motion capture is a challenging problem, particularly
  when monocular video is all that is available. We estimate biped control from
  monocular video by implicitly recovering physically plausible three-dimensional
  motion of a subject along with a character model (controller) capable of
  replaying this motion in other environments and under physical perturbations.
  Our approach consists of a state-space biped controller with a balance feedback
  mechanism that encodes control as a sequence of simple control tasks.
  Transitions among these tasks are triggered on time and proprioceptive events
  (e.g., contact). Inference takes the form of optimal control where we optimize
  (incrementally and/or in a batch) a high-dimensional vector of control
  parameters and structure of the controller based on an objective function that
  measures consistency of the resulting simulated motion with input observations.
  We illustrate our approach by automatically estimating controllers for a
  variety of motions directly from monocular video. To decouple errors
  introduced from tracking in video from errors introduced by the controllers, we
  also test on reference motion capture data. We show that estimation of
  controller structure through incremental optimization and refinement leads to
  controllers that are more stable and that better approximate the reference
  motion. We demonstrate our approach by capturing sequences of walking,
  jumping, and gymnastics. We evaluate the results through qualitative and
  quantitative comparisons to video and motion capture data.",
  image = "http://graphics.cs.cmu.edu/projects/controllerCapture/images/teaser_small.png",
  iconImage = "http://graphics.cs.cmu.edu/projects/controllerCapture/images/teaser_tiny.png",
  links = "http://graphics.cs.cmu.edu/projects/controllerCapture/",
  sidebarImage = "http://graphics.cs.cmu.edu/projects/controllerCapture/images/teaser_small.png",
  sidebarText = "We capture a motion of a person from a single camera view by
  estimating a physics-based controller for a character that would imitate the
  observed behavior."
}

Funding

This research is supported by:

- ONR PECASE Award N000140810910

- ONR YIP Award N000140710141

- Disney

Send comments and questions to Marek Vondrak.