Data Driven Grasp Synthesis using Shape Matching and Task-based Pruning

Ying Li, Jiaxin L. Fu, Nancy S. Pollard

IEEE Transactions on Visualization and Computer Graphics (2007)

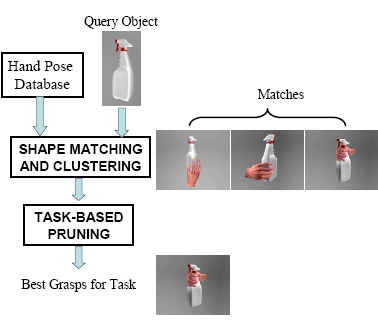

Human grasps, especially whole-hand grasps, are difficult to animate because of the high number of degrees of freedom of the hand and the need for the hand to conform naturally to the object surface. Captured human motion data provides us with a rich source of examples of natural grasps. However, for each new object, we are faced with the problem of selecting the best grasp from the database and adapting it to that object. This paper presents a data-driven approach to grasp synthesis. We begin with a database of captured human grasps. To identify candidate grasps for a new object, we introduce a novel shape matching algorithm that matches hand shape to object shape by identifying collections of features having similar relative placements and surface normals. This step returns many grasp candidates, which are clustered and pruned by choosing the grasp best suited for the intended task. For pruning undesirable grasps, we develop an anatomically based grasp quality measure specific to the human hand. Examples of grasp synthesis are shown for a variety of objects not present in the original database. This algorithm should be useful both as an animator tool for posing the hand and for automatic grasp synthesis in virtual environments.

Ying Li, Jiaxin L. Fu, Nancy S. Pollard (2007). Data Driven Grasp Synthesis using Shape Matching and Task-based Pruning. IEEE Transactions on Visualization and Computer Graphics.

@article{Li:inpress:ShaMatchTask,
  author = "Ying Li and Jiaxin L. Fu and Nancy S. Pollard",
  title = "Data Driven Grasp Synthesis using Shape Matching and Task-based Pruning",
  year = 2007,
  journal = "IEEE Transactions on Visualization and Computer Graphics",
  note = "In press",
}