A Deep Learning Approach for Generalized Speech Animation

Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, Iain Matthews

ACM Transactions on Graphics (2017)

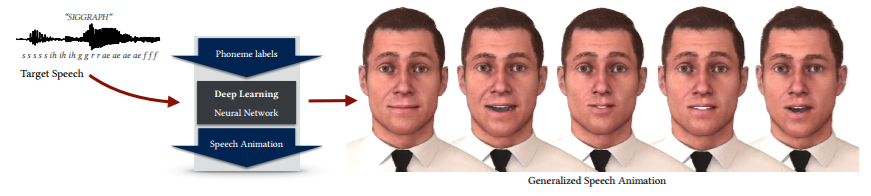

We introduce a simple and effective deep learning approach to automatically generate natural-looking speech animation that synchronizes to input speech. Our approach uses a sliding window predictor that learns arbitrary nonlinear mappings from phoneme label input sequences to mouth movements in a way that accurately captures natural motion and visual coarticulation effects. Our deep learning approach enjoys several attractive properties: it runs in real time, requires minimal parameter tuning, generalizes well to novel input speech sequences, is easily edited to create stylized and emotional speech, and is compatible with existing animation retargeting approaches. One important focus of our work is to develop an effective approach for speech animation that can be easily integrated into existing production pipelines. We provide a detailed description of our end-to-end approach, including machine learning design decisions. Generalized speech animation results are demonstrated over a wide range of animation clips on a variety of characters and voices, including singing and foreign language input. Our approach can also generate on-demand speech animation in real time from user speech input.
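The sliding window idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes one phoneme label per animation frame, a predictor that outputs a window of mouth-shape parameters as long as its input window, and simple averaging wherever the predicted windows overlap. The function names, the window length, and the untrained toy network are all hypothetical stand-ins.

```python
import numpy as np


def one_hot_windows(phoneme_ids, num_phonemes, window):
    """Cut a per-frame phoneme label sequence into overlapping one-hot windows."""
    ids = np.asarray(phoneme_ids)
    one_hot = np.eye(num_phonemes)[ids]  # shape (T, num_phonemes)
    starts = range(len(ids) - window + 1)
    # Each row is one flattened window of `window` consecutive frames.
    return np.stack([one_hot[s:s + window].ravel() for s in starts])


def make_toy_predictor(in_dim, out_dim, hidden=16, seed=0):
    """Untrained one-hidden-layer network; a placeholder for a learned model."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.1, size=(in_dim, hidden))
    w2 = rng.normal(scale=0.1, size=(hidden, out_dim))
    return lambda x: np.tanh(x @ w1) @ w2


def predict_sequence(phoneme_ids, predictor, num_phonemes, window, out_dim):
    """Slide the predictor over the input and average overlapping outputs."""
    x = one_hot_windows(phoneme_ids, num_phonemes, window)
    y = predictor(x).reshape(len(x), window, out_dim)
    num_frames = len(phoneme_ids)
    total = np.zeros((num_frames, out_dim))
    counts = np.zeros((num_frames, 1))
    for s in range(len(y)):
        total[s:s + window] += y[s]   # accumulate this window's prediction
        counts[s:s + window] += 1     # track how many windows cover each frame
    return total / counts             # frame-wise mean over overlapping windows


# Hypothetical usage: 8 frames, 4 phoneme classes, 3-frame windows,
# 2 mouth-shape parameters per frame.
frames = [0, 0, 1, 1, 2, 2, 2, 3]
toy = make_toy_predictor(in_dim=3 * 4, out_dim=3 * 2)
mouth_params = predict_sequence(frames, toy, num_phonemes=4, window=3, out_dim=2)
```

Because each frame is covered by several windows, the averaged output depends on neighboring phonemes as well as the current one, which is one plausible way a window-based predictor could capture context such as coarticulation.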

Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, Iain Matthews (2017). A Deep Learning Approach for Generalized Speech Animation. ACM Transactions on Graphics, 36(4).

@article{Hodgins:2017:DOE,
  author={Sarah Taylor and Taehwan Kim and Yisong Yue and Moshe Mahler and James Krahe and Anastasio Garcia Rodriguez and Jessica Hodgins and Iain Matthews},
  title={A Deep Learning Approach for Generalized Speech Animation},
  journal={ACM Transactions on Graphics},
  volume={36},
  number={4},
  year={2017},
}