Object Space EWA Surface Splatting

Project Description

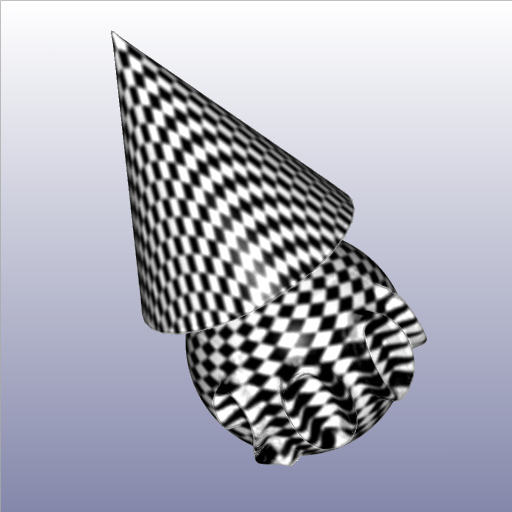

Point-based surface representations are well suited to output-sensitive rendering of large, complex 3D models. However, interactive point rendering with high-quality anisotropic texture filtering has been very challenging because of the lack of direct hardware support (most GPUs were designed for triangle-based representations). Object Space EWA Surface Splatting provided the first hardware solution to this problem. Although Elliptical Weighted Average (EWA) filtering can provide very high-quality anti-aliasing, it was not supported by graphics hardware because of its computational cost. We made it possible for point-based representations, for the first time, using a programmable GPU. We derived an object space formulation of the EWA filter, which we call the object space EWA filter, and developed a two-pass rendering algorithm that implements it with programmable GPU support (vertex shader 1.0 and pixel shader 1.0). We presented results for several point-based models to demonstrate the high image quality and real-time rendering speed of our method.
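To illustrate the idea behind an object space formulation, the following minimal Python sketch assumes the standard EWA setup: a Gaussian reconstruction kernel with 2×2 variance matrix `Vr` in the surfel's local tangent coordinates, a local affine mapping `J` (the Jacobian) from those coordinates to screen space, and a unit-variance Gaussian low-pass filter in screen space. Under these assumptions the screen space resampling filter has variance `J Vr J^T + I`, and the same filter expressed in object space has variance `Vr + J^-1 J^-T`. All function names and sample values are hypothetical, and the notation may differ from that of the paper.

```python
# Sketch only: 2x2 variance matrices of a Gaussian EWA resampling filter,
# expressed in screen space and in object (tangent plane) space.
# Assumes a unit-variance screen space low-pass filter (identity matrix).

IDENTITY = [[1.0, 0.0], [0.0, 1.0]]


def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose(a):
    """Transpose of a 2x2 matrix."""
    return [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]


def inverse(a):
    """Inverse of a 2x2 matrix (assumes a non-zero determinant)."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


def add(a, b):
    """Element-wise sum of two 2x2 matrices."""
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


def screen_space_variance(J, Vr):
    """Warped reconstruction kernel plus low-pass filter: J Vr J^T + I."""
    return add(matmul(matmul(J, Vr), transpose(J)), IDENTITY)


def object_space_variance(J, Vr):
    """The same filter pulled back to object space: Vr + J^-1 J^-T."""
    J_inv = inverse(J)
    return add(Vr, matmul(J_inv, transpose(J_inv)))


# Hypothetical minifying, slightly sheared mapping and a unit kernel.
J = [[0.5, 0.1], [0.0, 0.25]]
Vr = [[1.0, 0.0], [0.0, 1.0]]

obj = object_space_variance(J, Vr)
# Mapping the object space variance to screen space recovers J Vr J^T + I.
mapped = matmul(matmul(J, obj), transpose(J))
screen = screen_space_variance(J, Vr)
```

Under minification the term `J^-1 J^-T` dominates, so the object space kernel grows beyond the reconstruction kernel. This enlargement is what lets a splat drawn in object space carry the anti-aliasing low-pass filter with it.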

Splatting Comparison

This video compares the quality of object space EWA filtering with the result when no texture filtering is applied. The points are rendered on Nvidia's GeForce 4 Ti 4600 graphics card. (If you cannot see the video, please download the Microsoft MPEG-4 video codec.)

The second video compares the quality of object space EWA filtering for point-based representations with that of the anisotropic texture filtering that today's graphics hardware provides for texture-mapped triangle meshes. On the left-hand side of the video, the image is rendered using object space EWA splatting; on the right-hand side, it is rendered using texture-mapped triangles with anisotropic texture filtering and multisampling enabled. All rendering is done on Nvidia's GeForce 4 Ti 4600 graphics card.

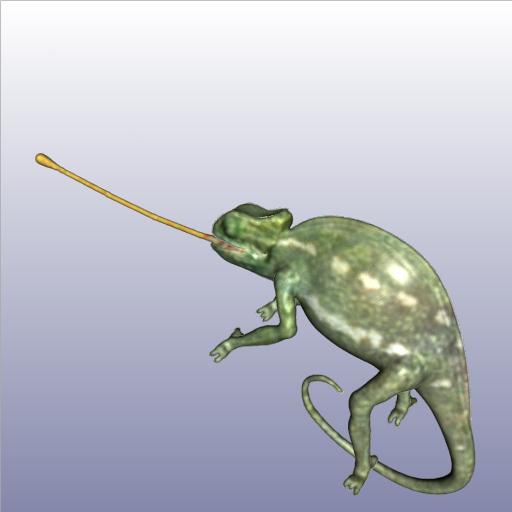

Surfel Models

The following videos are based on real-time screen capture at an image resolution of 1024×768. The surfel models are rendered using object space EWA surface splatting on ATI's Radeon 9700 graphics card. The blurring and noisy lines are artifacts of the real-time video capture and video compression.

Normalization Issues

Rendering artifacts can appear because of the irregular point distribution of surfel models. We use normalization to solve this problem. However, normalization in graphics hardware can be hard when the appropriate features are not available (as was the case before 2003). This video shows our solution, per-surfel normalization, which removes normalization artifacts using precomputed surfel weights. To make the normalization effects easier to see, no shading is applied during rendering.

Alternative solutions include per-pixel normalization, which requires frequently reading data back from the frame buffer. This video compares three approaches: splatting without filtering, splatting with per-pixel normalization, and splatting with per-surfel normalization. The video is based on real-time screen capture (ATI Radeon 8500 card).
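The difference between the two normalization strategies can be sketched in a small 1D Python example. It is an illustration only, not the shader implementation: splats are plain Gaussians, the frame buffer is a list, and the per-surfel scale is assumed to be the inverse of the total kernel weight measured at each surfel's center. That precomputation rule, along with all function names and sample values, is hypothetical.

```python
import math


def gaussian(x, center, sigma):
    """Unnormalized 1D Gaussian splat kernel."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)


def splat(centers, colors, sigma, pixels, scales=None):
    """Accumulate weighted color and weight for every pixel.

    `scales` holds optional per-surfel weights (hypothetical parameter).
    """
    if scales is None:
        scales = [1.0] * len(centers)
    color_acc = [0.0] * len(pixels)
    weight_acc = [0.0] * len(pixels)
    for center, color, scale in zip(centers, colors, scales):
        for i, x in enumerate(pixels):
            w = scale * gaussian(x, center, sigma)
            color_acc[i] += w * color
            weight_acc[i] += w
    return color_acc, weight_acc


def per_pixel_normalize(color_acc, weight_acc, eps=1e-8):
    """Divide accumulated color by accumulated weight at each pixel."""
    return [c / w if w > eps else 0.0
            for c, w in zip(color_acc, weight_acc)]


def precompute_surfel_scales(centers, sigma):
    """Assumed rule: inverse of the total kernel weight at each center."""
    return [1.0 / sum(gaussian(c, other, sigma) for other in centers)
            for c in centers]


# Irregularly spaced surfels with a constant color of 1.0.
centers = [0.0, 1.0, 1.5, 3.0, 3.2, 4.0]
colors = [1.0] * len(centers)
pixels = [1.0 + 0.25 * i for i in range(9)]  # samples from 1.0 to 3.0
sigma = 1.0

# No normalization: overlapping splats add up to much more than 1.0.
raw, raw_weights = splat(centers, colors, sigma, pixels)

# Per-pixel normalization: needs the accumulated weight at every pixel.
per_pixel = per_pixel_normalize(raw, raw_weights)

# Per-surfel normalization: weights are fixed before rendering, so the
# accumulated color is used directly, with no per-pixel division.
scales = precompute_surfel_scales(centers, sigma)
per_surfel, _ = splat(centers, colors, sigma, pixels, scales)
```

In this sketch per-pixel normalization reproduces the constant color exactly, while per-surfel normalization only approximates it. The trade-off is that the per-surfel variant needs no division by the accumulated weight at render time, which is the step that requires reading the frame buffer back.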

Publication

Liu Ren, Hanspeter Pfister and Matthias Zwicker, "Object Space EWA Surface Splatting: A Hardware Accelerated Approach to High Quality Point Rendering", EUROGRAPHICS 2002 (Best paper nominee), Computer Graphics Forum, 21(3), 461-470, 2002. Also available as MERL technical report TR2002-31. [BibTeX]

PDF download: Electronic Version (672 KB); Print Version (1 MB)

Presentation

Eurographics 2002, Saarbrücken, Germany, September 2-6, 2002. PPT file download.

Related Projects

Adaptive EWA Volume Splatting

Other Projects

Quantifying Natural Human Motion

Vision-based Performance Animation

Contact

Liu Ren (liuren@cs.cmu.edu), Carnegie Mellon University