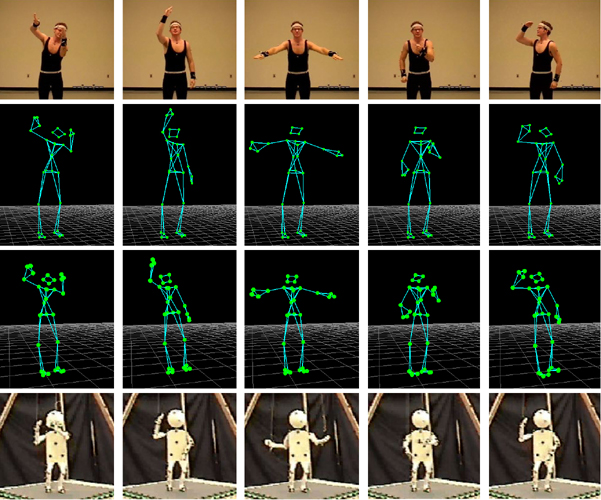

A motorized marionette is a marionette whose strings are driven by motors rather than by an operator's hands and fingers, as in conventional marionettes. If such a marionette could be programmed to imitate human motions, it would be a powerful and inexpensive device for entertainment, because operating a marionette by hand usually requires extensive practice. The objective of this project is to develop a control scheme that lets a motorized marionette realize human-like, expressive motions.

There are two possible approaches to controlling a motorized marionette: (1) capture a real performance and replace the operator's fingers with motors, and (2) use human motion capture data as the reference motion for the marionette. The first approach could be easier to control because the recorded data should already comply well with the kinematic and dynamic constraints of the marionette, but the data are harder to obtain because the operator must first practice the motion. In the second approach, the data are much easier to obtain, but they must be modified so that the motion becomes feasible for the marionette. We are currently working on the second approach: we record a motion sequence of a human actor and transform the data to compensate for the kinematic and dynamic differences between the actor and the marionette. We recognize that the first approach (using real performance data) is also important for capturing the talent of an operator and for learning how operators modify human motions to make the marionette's motions appear more expressive.

The main differences between human actors and the marionette are:

The control software is built to compensate for these significant differences and to realize human-like motions on the marionette.

We used four motions from two stories performed by two actors. Experimental results showed that the motions of the marionette are close enough to the reference motions that two different styles of the same story can be distinguished.

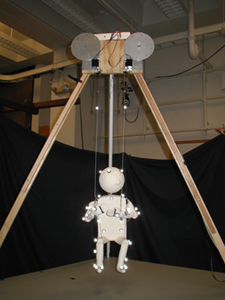

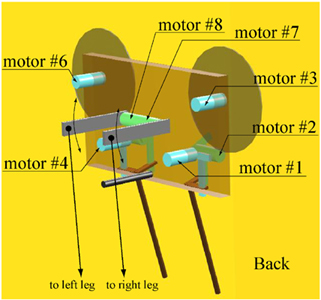

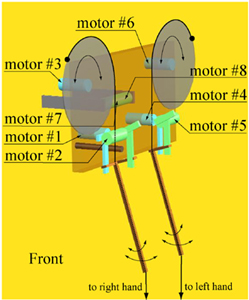

The marionette is driven by eight hobby servo motors: six for the arms and two for the legs. The motors accept angle commands with a resolution of approximately 180/256 degrees (about 0.7 degrees). The markers are attached only during the experiments for identifying the swing dynamics, not during actual performances.
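To illustrate what the stated command resolution implies, the following minimal sketch quantizes a desired servo angle to the nearest command step. It assumes, hypothetically, that each motor takes an 8-bit integer command (0 to 255) covering a 0 to 180 degree range; the function names are illustrative and do not come from the project's control software.

```python
# Step size taken from the text: approximately 180/256 degrees per command unit.
STEP_DEG = 180.0 / 256.0

# Assumption: commands are integers in [0, 255] (8-bit), not confirmed by the source.
MIN_COMMAND = 0
MAX_COMMAND = 255


def angle_to_command(angle_deg: float) -> int:
    """Round a desired angle to the nearest command step and clamp to the valid range."""
    step = round(angle_deg / STEP_DEG)
    return max(MIN_COMMAND, min(MAX_COMMAND, step))


def command_to_angle(command: int) -> float:
    """Return the angle (in degrees) that a given command step represents."""
    return command * STEP_DEG


def quantization_error(angle_deg: float) -> float:
    """Difference between the angle actually commanded and the angle requested."""
    return command_to_angle(angle_to_command(angle_deg)) - angle_deg
```

Under these assumptions, any requested angle inside the commandable range is reproduced to within half a step (about 0.35 degrees), which gives a rough lower bound on how closely a reference motion can be tracked at the motor level.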


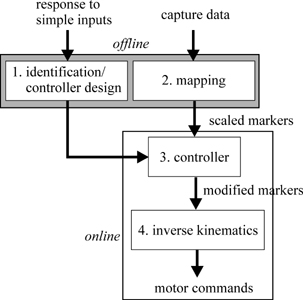

The control software is composed of the following four components:
