ACM Transactions on Graphics, 32(4):87:1-7, July 2013.

Proceedings of ACM SIGGRAPH 2013, Anaheim.

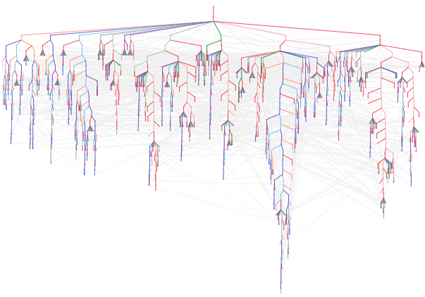

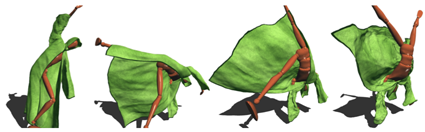

The central argument against data-driven methods in computer graphics rests on the curse of dimensionality: it is intractable to precompute "everything" about a complex space. In this paper, we challenge that assumption by using several thousand CPU-hours to perform a massive exploration of the space of secondary clothing effects on a character animated through a large motion graph. Our system continually explores the phase space of cloth dynamics, incrementally constructing a secondary cloth motion graph that captures the dynamics of the system. We find that it is possible to sample the dynamical space to a low visual error tolerance and that secondary motion graphs containing tens of gigabytes of raw mesh data can be compressed down to only tens of megabytes. These results allow us to capture the effect of high-resolution, off-line cloth simulation for a rich space of character motion and deliver it efficiently as part of an interactive application.
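To make the idea of incrementally constructing a secondary cloth motion graph more concrete, here is a minimal toy sketch of one way such a graph could be built. It is not the authors' implementation. It assumes several things. The character motion graph `CHAR_GRAPH` is hypothetical. A 2-D vector stands in for a full cloth mesh state. A damped toy update `simulate` replaces the off-line cloth simulator. Plain Euclidean distance with tolerance `tol` replaces the paper's visual error metric. Each secondary-graph node pairs a character clip with a cloth state. After a clip is simulated, the resulting cloth state either snaps to an existing node for the next clip (when it is within tolerance) or becomes a new node that is queued for further exploration.

```python
import math
from collections import deque

# Hypothetical character motion graph: clip name -> clips that may follow it.
CHAR_GRAPH = {"walk": ["walk", "turn"], "turn": ["walk"]}

# Hypothetical per-clip forcing that drives a 2-D toy "cloth state".
FORCING = {"walk": (1.0, 0.0), "turn": (0.0, 1.0)}


def simulate(clip, state, steps=20, damping=0.7):
    """Toy stand-in for an off-line cloth simulator: a damped system that
    relaxes toward the clip's forcing. Returns the end-of-clip cloth state."""
    x, y = state
    fx, fy = FORCING[clip]
    for _ in range(steps):
        x = damping * x + (1 - damping) * fx
        y = damping * y + (1 - damping) * fy
    return (x, y)


def explore(start_clip, start_state, tol=1e-3, budget=100):
    """Grow a secondary motion graph until no unexplored node remains
    or the simulation budget is spent.

    nodes: list of (clip, cloth_state) pairs
    edges: (source node id, next clip) -> target node id
    """
    nodes = [(start_clip, start_state)]
    edges = {}
    frontier = deque([0])  # node ids whose outgoing clip has not been simulated
    sims = 0
    while frontier and sims < budget:
        i = frontier.popleft()
        clip, state = nodes[i]
        end_state = simulate(clip, state)  # one (expensive) simulation per node
        sims += 1
        for nxt in CHAR_GRAPH[clip]:
            # Reuse an existing node for the next clip if its cloth state is
            # within tolerance; this snap closes loops in the graph.
            match = next(
                (j for j, (c, s) in enumerate(nodes)
                 if c == nxt and math.dist(s, end_state) <= tol),
                None,
            )
            if match is None:
                nodes.append((nxt, end_state))
                match = len(nodes) - 1
                frontier.append(match)  # new state: must be explored later
            edges[(i, nxt)] = match
    return nodes, edges
```

Calling `explore("walk", (0.0, 0.0))` on this toy setup terminates after four simulations with four nodes and seven edges. Every node then has an outgoing edge for each clip that may follow it, so playback never reaches a dead end. In this sketch, the tolerance trades memory (more nodes) against the error introduced by snapping a simulated state onto a stored one. A real system would also need a nearest-neighbor search over high-dimensional mesh states and a policy for choosing which frontier node to expand next. The paper's actual error metric and exploration strategy may differ from the choices made here.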

TechCrunch: Researchers Create Near-Exhaustive, Ultra-Realistic Cloth Simulation

CNET: Computers sweat for 4,554 hours to simulate cloth movement