So, What Did We Miss?

This is our entire game development model as of game0: we author c++ code, hand it to a compiler to generate an executable, and send that executable to the player.

So what are we missing?

Last class, we talked about the game code we ship to the player.

But this misses a whole chunk of game programming code -- code that never reaches the player.

What does this code do?

Why is this important to think about?

This is our entire game development model as of game0: we author c++ code, hand it to a compiler to generate an executable, and send that executable to the player.

So what are we missing?

The answer -- for simple games -- can be nothing. But at some point, generating all of the sound, visuals, and story as c++ becomes limiting. It's these data files -- the assets that are part of our game -- that I'm going to talk about today.

An asset is any data that is used in a game. Images, sounds, 3D models, shaders, levels, dialog, scripts, ... the list continues -- basically, if you aren't hardcoding it, you might consider it an asset. (Arguably, heck, maybe even game code fits this model.)

But where do these assets come from?

One of the key ideas in game programming is the asset pipeline.

Assets are created in an editing format, which can be read and written by editing tools. It is read by a preprocessing tool and converted to a distribution format. Finally, the game code uses the runtime to use the distribution format.

Notice that there is something really elegant going on here: The authoring tools, data, and converters over on the left get run only during content creation, and only in the studio; the runtime format and code -- on the other hand -- are shipped with the game and run by players all the time.

Why would we care about this split? Because it means that all this stuff on the left can be hack-y, slow, computer-specific, over-complete, and non-robust. The stability of the final game only depends on the code on the right.

Further, the runtime code and format is the only code that needs to be multi-platform (and, indeed, can be platform-specific (Why would one want this? Accelerated mp3 vs ogg decoding; little vs big endian systems; texture compression formats).

In other words, the authoring code can prioritize authoring productivity and ease-of-development, while the runtime code and format can prioritize speed and simplicity.

When designing an asset pipeline, start from the ends:

Authoring Tool(s): Can I do this with Blender, GIMP, Inkscape, my favorite DAW, and/or a text editor? (And how will I use them?)

(Big idea: re-use existing tools rather than writing new ones. Blender, GIMP, Inkscape, and text editors are all great authoring tools.) (Big pitfall to avoid: premature workflow optimization. Sometimes "good enough" is, indeed, good enough.)

Runtime Code: How will I use the runtime code? (What does it look like to load an asset? What does it look like to use/draw/query the asset?)

In both cases -- write examples before writing a specification.

(Thing to think about: what tasks does the runtime code handle? Basically: loading + association + (possibly) display.) (Thing to think about: how/when to associate handles in your code with loaded assets?)

Runtime Format what does the hardware need?

(Big idea: make on-disc format look as much as possible like in-memory format.) (Big idea: you can do a lot with arrays [see later in this lecture].) (Big idea: when dealing with large files, consider using a well-known compressed format.) (Big pitfall to avoid: developing a runtime that does parsing. Runtime formats should be easier than that to load.)

Now that you know your authoring tools and runtime interface, you know what your processing script needs to do.

read_chunk?)Remember: internal scripting engines in your favorite tools (e.g. blender/python, GIMP/scheme).

Remember: you don't have to distribute processing code, and it doesn't have to work on everything, just a few things.

Remember: Makefiles and shell scripts. It's okay to have a multi-step process.

If your asset pipeline takes a long time or only works on a few machines, this is one place where checking generated binary files [your asset files] into git -- or looking for another versioned binary file store -- might make sense.

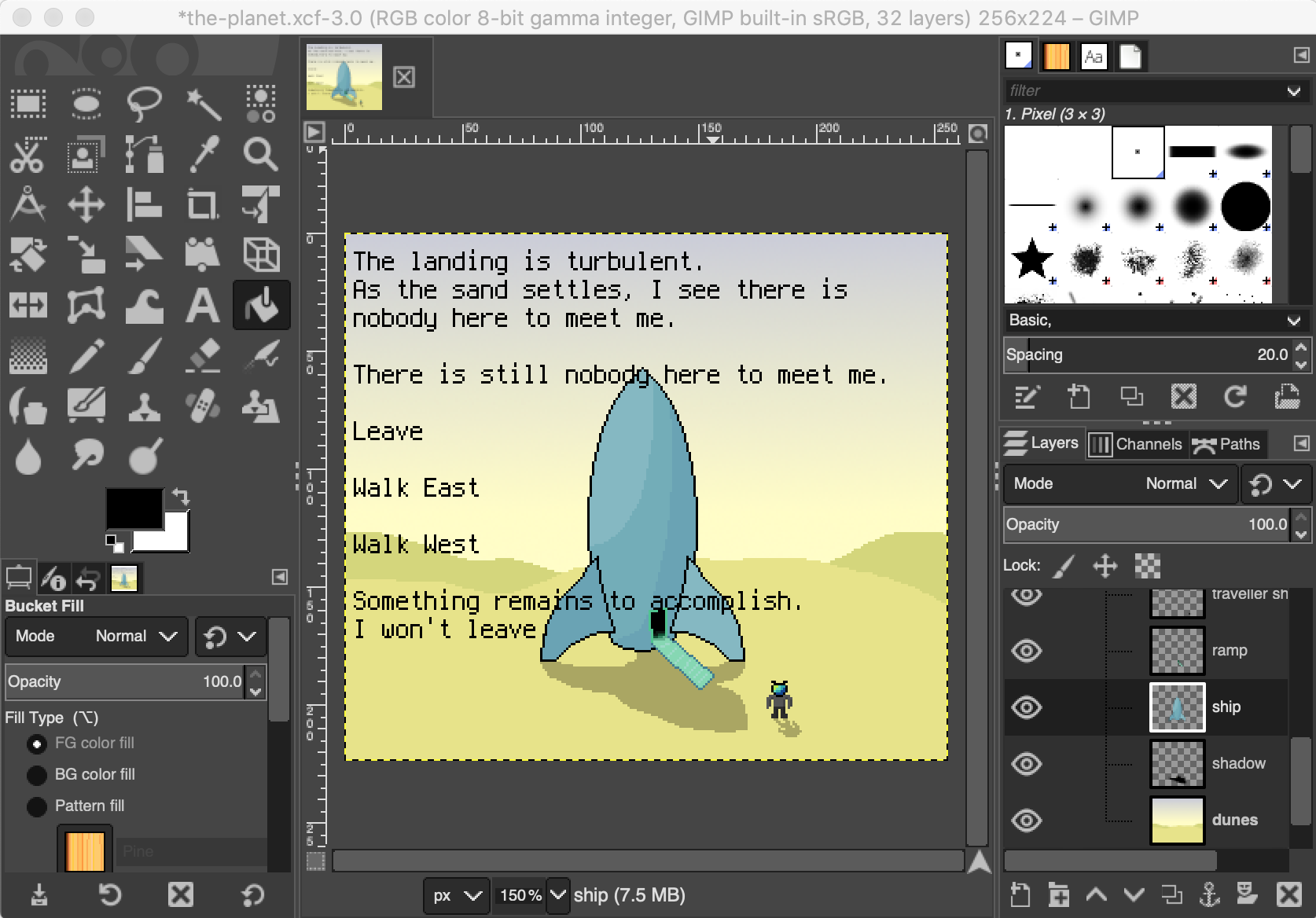

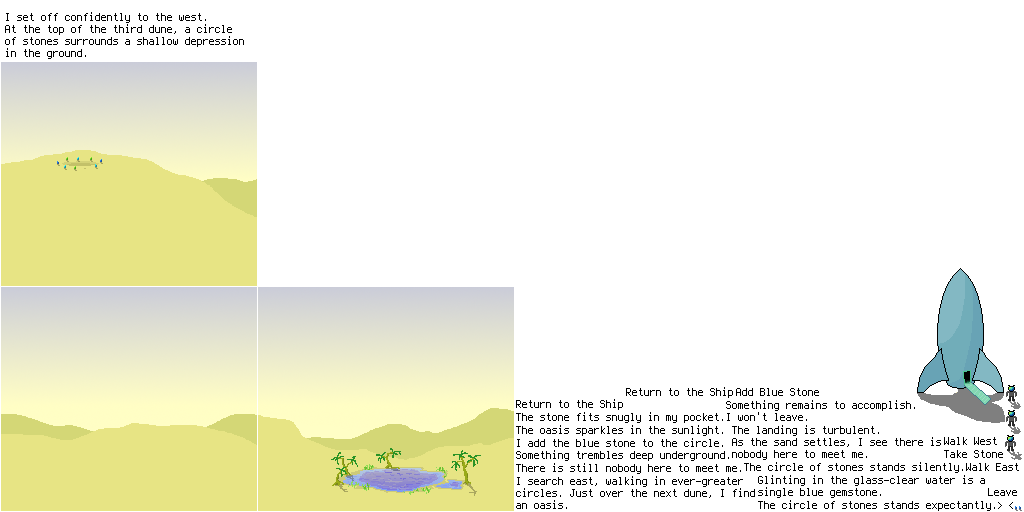

Authoring: GIMP + text file

Runtime: Sprite atlas with lookup functions

Processing: extract with python+scheme, pack with C++

Runtime Format: PNG + binary extents

Runtime Code: lookup/loading; drawing helper.