Programming Project #1

15-463: Computational Photography

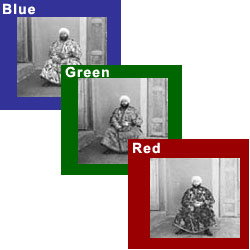

IMAGES OF THE RUSSIAN EMPIRE: Colorizing the Prokudin-Gorskii photo collection

Due Date: by 11:59pm, Monday, Sept 12

BACKGROUND

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) was a man well ahead of his time. Convinced, as early as 1907, that color photography was the wave of the future, he won the Tsar’s special permission to travel across the vast Russian Empire and take color photographs of everything he saw. And he really photographed everything: people, buildings, landscapes, railroads, bridges…thousands of color pictures! His idea was simple: record three exposures of every scene onto a glass plate using a red, a green, and a blue filter. Never mind that there was no way to print color photographs until much later – he envisioned special projectors to be installed in “multimedia” classrooms all across

OVERVIEW

The goal of this assignment is to take the digitized Prokudin-Gorskii glass plate images such as this one and, using image processing techniques, automatically produce a color image with as few visual artifacts as possible. In order to do this, you will need to extract the three color channel images, place them on top of each other, and align them so that they form a single RGB color image. We will assume that a simple (x,y) translation model is sufficient for proper alignment. However, the full-size glass plate images are very large, so your alignment procedure will need to be relatively fast and efficient.

DETAILS

A few of the digitized glass plate images (both hi-res and low-res versions) will be placed in the following directory (note that the filter order from top to bottom is BGR, not RGB!): data/. Your program will take a glass plate image as input and produce a single color image as output. The program should divide the image into three equal parts and align the second and the third parts (G and R) to the first (B). For each image, you will need to print the (x,y) displacement vector that was used to align the parts.
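The splitting and stacking step described above can be sketched as follows. This is a minimal illustration in Python with NumPy (an assumption; the course skeleton is in Matlab), and the function names `split_plate`, `shift`, and `compose` are hypothetical. It assumes the plate is already loaded as a 2-D grayscale array.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked plate (B on top, then G, then R) into three equal parts."""
    height = plate.shape[0] // 3          # leftover rows at the bottom are dropped
    b = plate[0:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r

def shift(channel, dx, dy):
    """Translate a channel by (dx, dy) pixels. np.roll wraps pixels around the edges."""
    return np.roll(channel, (dy, dx), axis=(0, 1))

def compose(b, g, r, g_shift=(0, 0), r_shift=(0, 0)):
    """Apply the (x, y) displacements to G and R, then stack as an RGB image."""
    g_aligned = shift(g, *g_shift)
    r_aligned = shift(r, *r_shift)
    return np.dstack([r_aligned, g_aligned, b])   # channel order R, G, B

# Tiny synthetic "plate": 9 rows, 4 columns.
plate = np.arange(36).reshape(9, 4)
b, g, r = split_plate(plate)
rgb = compose(b, g, r, g_shift=(1, 0), r_shift=(0, 0))
print("G displacement:", (1, 0), "R displacement:", (0, 0), "output shape:", rgb.shape)
```

The wrap-around behavior of `np.roll` is a convenience here; pixels that wrap are meaningless and end up in the border region of the result.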

The easiest way to align the parts is to exhaustively search over a window of possible displacements (say [-15,15] pixels), score each one using some image matching metric, and take the displacement with the best score. There are a number of possible metrics that one could use to score how well the images match. The simplest one is the (squared) L2 norm, also known as the Sum of Squared Differences (SSD) distance, which is simply sum(sum((image1-image2).^2)). Another is normalized correlation. Note that in this particular case, the images to be matched do not actually have the same brightness values (they are different color channels), so a cleverer metric might work better.
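A minimal sketch of the exhaustive search, written in Python with NumPy rather than the Matlab of the skeleton code (an assumption made for illustration). The names `ssd`, `ncc`, and `align_exhaustive` are hypothetical, and `ncc` here is the zero-mean variant of normalized correlation, which is one of several reasonable definitions. Scoring only the central region (the `border` parameter, also an assumption) keeps the wrapped-around edge pixels out of the score.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences: lower means a better match."""
    return float(np.sum((a - b) ** 2))

def ncc(a, b):
    """Zero-mean normalized correlation in [-1, 1]: higher means a better match."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def align_exhaustive(channel, reference, window=15, cost=ssd, border=0.1):
    """Return the (dx, dy) in [-window, window]^2 that minimizes `cost`."""
    channel = channel.astype(np.float64)
    reference = reference.astype(np.float64)
    h, w = reference.shape
    my, mx = int(h * border), int(w * border)      # margins excluded from scoring
    ref_core = reference[my:h - my, mx:w - mx]
    best_cost, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            c = cost(shifted[my:h - my, mx:w - mx], ref_core)
            if c < best_cost:
                best_cost, best_shift = c, (dx, dy)
    return best_shift

# Synthetic check: displace a random image and recover the displacement.
rng = np.random.default_rng(0)
ref = rng.random((40, 50))
moved = np.roll(ref, (-3, 5), axis=(0, 1))
print(align_exhaustive(moved, ref, window=6))                                # SSD
print(align_exhaustive(moved, ref, window=6, cost=lambda a, b: -ncc(a, b)))  # NCC
```

Because `ncc` is a similarity rather than a distance, it is negated before being passed as a cost. Unlike SSD, it is unaffected by a gain or offset in brightness between the two channels, which is one reason it may behave better on different color channels.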

Exhaustive search will become prohibitively expensive if the pixel displacement is too large (which will be the case for high-resolution glass plate scans). In this case, you will need to implement a faster search procedure such as an image pyramid. An image pyramid represents the image at multiple scales (usually scaled by a factor of 2), and the processing is done sequentially, starting from the coarsest scale and working down to the finest, refining the displacement estimate at each level. It is very easy to implement by adding recursive calls to your original single-scale implementation.
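The recursive coarse-to-fine idea can be sketched as below, again in Python with NumPy as an illustrative assumption. The names `downsample2`, `search`, and `align_pyramid`, the 2x2 block averaging used for downscaling, and the parameter defaults are all hypothetical choices, not part of the assignment. The displacement found at a coarse level is doubled and then refined with a small search at the next finer level.

```python
import numpy as np

def downsample2(img):
    """Halve the resolution by averaging 2x2 blocks (a simple stand-in for blur + subsample)."""
    h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    img = img[:h, :w]
    return (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2]) / 4.0

def search(channel, reference, center, radius):
    """Exhaustive SSD search over shifts within `radius` of `center` = (dx, dy)."""
    best_score, best_shift = np.inf, center
    for dy in range(center[1] - radius, center[1] + radius + 1):
        for dx in range(center[0] - radius, center[0] + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))   # wraps at the edges
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dx, dy)
    return best_shift

def align_pyramid(channel, reference, min_size=64, coarse_radius=8, refine_radius=2):
    """Return (dx, dy) aligning `channel` to `reference`, searching coarse to fine."""
    channel = channel.astype(np.float64)
    reference = reference.astype(np.float64)
    if min(reference.shape) <= min_size:
        # Coarsest level: small image, so a wide exhaustive search is cheap.
        return search(channel, reference, (0, 0), coarse_radius)
    dx, dy = align_pyramid(downsample2(channel), downsample2(reference),
                           min_size, coarse_radius, refine_radius)
    # One pixel at the coarser level is two pixels here; refine around that guess.
    return search(channel, reference, (2 * dx, 2 * dy), refine_radius)

rng = np.random.default_rng(0)
ref = rng.random((256, 256))
moved = np.roll(ref, (8, -12), axis=(0, 1))
print(align_pyramid(moved, ref))
```

With these defaults a 256-pixel image needs 289 candidate shifts at the coarsest level and 25 at each finer level, compared with thousands for a single-scale search covering the same range.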

The above directory has skeleton Matlab code that will help you get started.

BELLS & WHISTLES

Using your digital camera, try to repeat Prokudin-Gorskii’s approach to color photography. Switch your camera to black-and-white mode (or use rgb2gray in Matlab), and take three versions of the same scene (a tripod helps) with three different filters, roughly corresponding to Red, Green, and Blue. Don’t worry too much about the quality of the filters (these plastic binder separators might work fine). Make a composite from the three color channels and compare it to the real color photograph. In general, your filters won’t correspond to real RGB, so your colors will be off. Consider a way to use a known object in the scene to calibrate your filters and make them correspond to the correct colors (e.g. white balance).
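One possible calibration, sketched in Python with NumPy as an illustrative assumption: if the scene contains an object known to be white or gray, scale each channel so that object comes out neutral. The function name `white_balance` and the patch convention are hypothetical, and this per-channel gain is only one of many ways to approach the problem.

```python
import numpy as np

def white_balance(rgb, patch):
    """Scale channels so a known-neutral patch becomes gray.

    rgb:   float array of shape (H, W, 3) with values in [0, 1]
    patch: (row0, row1, col0, col1) bounds of the known white/gray object
    """
    r0, r1, c0, c1 = patch
    patch_mean = rgb[r0:r1, c0:c1].reshape(-1, 3).mean(axis=0)
    # Per-channel gain that maps the patch color to its own average brightness.
    # Assumes no channel of the patch is exactly zero.
    gains = patch_mean.mean() / patch_mean
    return np.clip(rgb * gains, 0.0, 1.0)

# A uniformly orange-tinted image becomes neutral gray after calibration.
tinted = np.empty((4, 4, 3))
tinted[...] = [0.8, 0.4, 0.2]
balanced = white_balance(tinted, (0, 2, 0, 2))
print(balanced[0, 0])
```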

The borders of the photograph will have strange colors since the three channels won’t exactly align. See if you can devise an automatic way of cropping the border to get rid of the bad stuff. The idea is that the information in the good parts of the image generally agrees across the color channels, whereas at the borders it does not.
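A rough heuristic along these lines, in Python with NumPy as an illustrative assumption: measure how much the three channels disagree in each row and column, and trim edge rows and columns whose disagreement is well above the typical level. The function name `crop_bounds`, the spread measure, and the `factor` and `max_frac` parameters are all hypothetical and would need tuning on real plates.

```python
import numpy as np

def crop_bounds(rgb, factor=2.0, max_frac=0.2):
    """Return (top, bottom, left, right) so that rgb[top:bottom, left:right] drops bad borders."""
    rgb = rgb.astype(np.float64)
    # Per-pixel disagreement: gap between the brightest and darkest channel.
    spread = rgb.max(axis=2) - rgb.min(axis=2)
    row_score = spread.mean(axis=1)
    col_score = spread.mean(axis=0)

    def trim(scores):
        limit = factor * np.median(scores)        # "typical" disagreement, scaled up
        max_trim = int(len(scores) * max_frac)    # never remove more than this per side
        lo = 0
        while lo < max_trim and scores[lo] > limit:
            lo += 1
        hi = len(scores)
        while len(scores) - hi < max_trim and scores[hi - 1] > limit:
            hi -= 1
        return lo, hi

    top, bottom = trim(row_score)
    left, right = trim(col_score)
    return top, bottom, left, right

# Gray image with a miscolored band on the top 2 rows and left 3 columns.
img = np.full((20, 30, 3), 0.5)
img[:2, :, 0], img[:2, :, 2] = 1.0, 0.0
img[:, :3, 0], img[:, :3, 2] = 1.0, 0.0
print(crop_bounds(img))
```

Real photographs have legitimate color, so channels disagree in the interior too; comparing against the median is an attempt to account for that baseline rather than a guarantee.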

SCORING

The assignment is worth 100 points. You will get 60 points for a single-scale implementation demonstrating successful results on the low-resolution images. You will get 40 more points for a multiscale pyramid implementation, showing that you can handle larger input images (depending on the memory of your machine, you might still not be able to run on the full-resolution images, in which case, show results on an intermediate resolution that your machine can handle). Up to 20 points of extra credit will be assigned for any Bells and Whistles (either suggested or your own).

WHAT TO TURN IN

You will need to create a web page showing the results of this assignment and describing any of the extras that you have done. Show your results on all images that were provided, plus a few others of your own choosing from the LoC collection. Additionally, you will need to hand in all of your code to a specified directory (not publicly readable). I will provide more information about the appropriate directories for the web page and the code.
