
15-463: Rendering and Image Processing

Programming Assignment #4: Fun with Image Stacks

Due Date: by 11:59pm, Tuesday, Nov 23

OVERVIEW

In this assignment, you will explore one of the many uses of image stacks in computer graphics by choosing one project from the list below. In every case, image acquisition is a major part of the assignment: you will need to capture good images or video under controlled conditions to get good results from these methods. A tripod is essential for capturing image stacks; make sure that your camera does not wobble.

Each project involves reading and understanding a research paper and doing some modest programming to achieve the desired result. The projects are listed from the most well-defined to the most free-form. Choose the one that strikes your fancy:

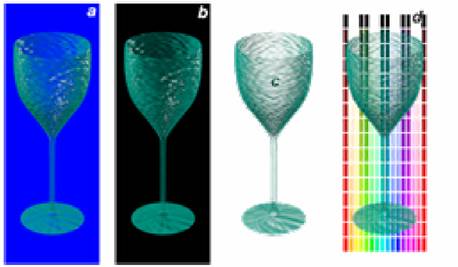

TRIANGULATION MATTING AND COMPOSITING

Pick an interesting, semitransparent object and produce an alpha matte by photographing it against two different backgrounds. That is, given the four images C1 and C2 (the object against each of the two backgrounds) and Ck1 and Ck2 (the backgrounds alone), compute the object image Co and the corresponding alpha matte. This can be done simply by solving the system of linear equations on Slide 15 of the Matting lecture. The only trick to remember is that, when solving for the unknowns, it is very useful to treat the colors of Co as premultiplied by alpha, i.e., Co = [αR, αG, αB].
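The per-pixel solve can be sketched as follows. This is a minimal plain-Python sketch, not the lecture's code: it assumes each pixel is an (R, G, B) tuple of floats in [0, 1], and the names `triangulation_matte` and `composite` are hypothetical. With premultiplied Co, each background gives C = Co + (1 − α)Ck, so the two shots yield six equations in the four unknowns (R, G, B, α). Their least-squares solution has a closed form: subtracting the two equations gives C1 − C2 = (1 − α)(Ck1 − Ck2), which determines α, and Co is then the average of the two back-substituted estimates.

```python
def triangulation_matte(c1, c2, ck1, ck2):
    """Solve for premultiplied foreground Co and alpha at one pixel.

    c1, c2   : observed colors of the object over background 1 and 2
    ck1, ck2 : colors of background 1 and 2 alone
    Each argument is an (R, G, B) tuple of floats.
    Model: c_i = Co + (1 - alpha) * ck_i, with Co premultiplied by alpha.
    """
    d_obs = [a - b for a, b in zip(c1, c2)]    # C1 - C2
    d_bg = [a - b for a, b in zip(ck1, ck2)]   # Ck1 - Ck2
    denom = sum(d * d for d in d_bg)
    if denom == 0.0:
        raise ValueError("backgrounds are identical at this pixel; alpha is unconstrained")
    # Least-squares estimate of (1 - alpha) from C1 - C2 = (1 - alpha)(Ck1 - Ck2)
    beta = sum(o * b for o, b in zip(d_obs, d_bg)) / denom
    beta = min(max(beta, 0.0), 1.0)            # clamp so alpha stays in [0, 1]
    alpha = 1.0 - beta
    # Back-substitute into both equations and average the two estimates of Co
    co = tuple(((a - beta * ka) + (b - beta * kb)) / 2.0
               for a, b, ka, kb in zip(c1, c2, ck1, ck2))
    return co, alpha


def composite(co, alpha, background):
    """'Over' compositing with premultiplied color: C = Co + (1 - alpha) * B."""
    return tuple(f + (1.0 - alpha) * b for f, b in zip(co, background))
```

To matte a full image, apply `triangulation_matte` at every pixel (the same formulas vectorize directly in Matlab); `composite` is the premultiplied "over" step used when placing the object into the new scene. Clamping α is a design choice: at pixels where the raw estimate falls outside [0, 1], Co is no longer the exact least-squares solution.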

While the backgrounds can be any images at all, we advise using uniform colors, since refraction (which we are not modeling) in some transparent objects can change the pixel correspondence between the foreground and background shots. Also make sure there is enough light in the scene to avoid noise in the resulting images, but be careful not to create too many shadows. Take all the images with the same settings (set the camera to manual mode).

After successfully acquiring an object with its matte, you will composite the object into a novel (and interesting!) scene. For example, you can put a giant Coke bottle at the entrance to Wean Hall. Since the camera orientation will most likely differ between the shot of your object and the novel scene, you will need to compute a homography and warp one of the images using code from the Mosaicing project. Put a square marker somewhere in the scene when you capture your object, and another one on the ground of the target composite scene (or use one of the square-like tiles on the ground). Use these four point correspondences to estimate the homography.
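Estimating the homography from the four square corners can be sketched as below. This is a hypothetical plain-Python stand-in for your Mosaicing code, assuming exactly four (x, y) correspondences with no three points collinear; it fixes H[2][2] = 1 and solves the resulting 8 × 8 linear system directly. The names `solve_linear`, `homography_from_4_points`, and `apply_homography` are illustrative only.

```python
def solve_linear(a, b):
    """Solve a square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src, dst):
    """Estimate H (3x3, with H[2][2] fixed to 1) mapping src[i] -> dst[i].

    src, dst: four (x, y) pairs each, e.g. the square's corners in the
    object photo and in the target scene, listed in the same order.
    """
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.extend([u, v])
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(H, x, y):
    """Map point (x, y) through H, including the perspective divide."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

To warp, map each output (target scene) pixel back through the inverse of H and sample the object image there, as in the Mosaicing project. Warp α together with Co so that the compositing step stays consistent.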

VIDEO TEXTURES

Implement a simple version of the Video Textures paper. Acquire a video sequence of some repeatable phenomenon, and compute transitions between frames of this sequence so that it can be played forever. Don’t worry about the fancy stuff like blending/warping and Q-learning for detecting dead ends. Just precompute a set of good transitions and jump between them randomly, but always take the last good transition in the sequence so that playback never runs into a dead end.
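The transition table and random playback can be sketched as follows, under stated assumptions: frames are flat lists of grayscale values, frame distance is plain L2, and a fixed `threshold` stands in for the paper's exponentially weighted transition probabilities. All names and the `jump_prob` parameter are hypothetical. Only backward jumps (j < i) are kept, so the last frame with a good transition is as far as playback ever gets, and always taking that jump avoids the dead end.

```python
import random


def frame_distance(f, g):
    """L2 distance between two frames given as flat lists of pixel values."""
    return sum((a - b) ** 2 for a, b in zip(f, g)) ** 0.5


def good_transitions(frames, threshold):
    """For each frame i, list backward jumps i -> j (j < i) that look smooth.

    Jumping from i to j replaces the natural successor i + 1 with j, so the
    jump is good when frame j closely resembles frame i + 1.
    """
    trans = {}
    for i in range(len(frames) - 1):
        targets = [j for j in range(i)
                   if frame_distance(frames[i + 1], frames[j]) < threshold]
        if targets:
            trans[i] = targets
    return trans


def play(frames, trans, length, jump_prob=0.3, rng=random):
    """Generate a sequence of frame indices that can run forever.

    Random good transitions are taken with probability jump_prob; the last
    frame that has a transition always jumps back, so playback never reaches
    the end of the clip.
    """
    if not trans:
        raise ValueError("no good transitions; raise the threshold")
    last = max(trans)
    # Forced jump: the best-matching target of the last transition frame
    forced = min(trans[last], key=lambda j: frame_distance(frames[last + 1], frames[j]))
    i, seq = 0, []
    for _ in range(length):
        seq.append(i)
        if i == last:
            i = forced
        elif i in trans and rng.random() < jump_prob:
            i = rng.choice(trans[i])
        else:
            i += 1
    return seq
```

One heuristic (an assumption, not from the paper) is to start with a threshold at a small fraction of the median frame distance, then watch the resulting loops and adjust.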

The most challenging part of this project is dealing with video, which is not easy. Pick a simple video texture that does not need much video data (30 seconds at most). Processing video in Matlab is a bit tricky: in theory there is aviread, but under Linux it reads only uncompressed AVIs, while most current digital cameras produce video in DV AVI format. One way to deal with this is to split the video into individual frames and then read them into Matlab one by one. On the graphics cluster, you can run (some variant of) the following to extract the frames from a video:

```shell
mplayer -vo jpeg -jpeg quality=100 -fps 30 mymovie.avi
```

Also note that handling video is time-consuming, not just for you but for the computer as well. A minute of video at 30 frames per second is already 60 × 30 = 1800 images! So start early, and don’t be afraid to let Matlab crunch numbers overnight.

RECOVERING HIGH DYNAMIC RANGE IMAGES

Follow the procedure outlined in the Debevec paper to recover an HDR image from a set of images with varying exposures. You will need to read the paper carefully and understand it completely first, but after that the implementation is straightforward (you can use the Matlab code given in the paper and shown in lecture). Your result should be a radiance map of the scene.
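Once the response curve g has been recovered (for example with the Matlab code from the paper), each pixel's radiance is a weighted average over the exposures: ln E = Σ w(Z)(g(Z) − ln Δt) / Σ w(Z), with a hat-shaped weight w that trusts mid-range pixel values most. The plain-Python sketch below assumes 8-bit pixel values and g stored as a 256-entry list; the names `hat_weight` and `log_radiance` are hypothetical.

```python
import math


def hat_weight(z, z_min=0, z_max=255):
    """Hat weighting: largest for mid-range values, zero at the extremes."""
    mid = 0.5 * (z_min + z_max)
    return z - z_min if z <= mid else z_max - z


def log_radiance(pixel_values, exposure_times, g):
    """Combine one pixel's values across exposures into ln(E).

    pixel_values   : value Z_j of this pixel in each exposure (ints 0..255)
    exposure_times : shutter times dt_j in seconds, same order
    g              : recovered response curve as a 256-entry list, g[Z] = ln(E * dt)
    """
    num = den = 0.0
    for z, dt in zip(pixel_values, exposure_times):
        w = hat_weight(z)
        num += w * (g[z] - math.log(dt))
        den += w
    if den == 0.0:
        # Every exposure is fully black or fully saturated here: no reliable estimate
        raise ValueError("pixel is clipped in every exposure")
    return num / den
```

Apply `log_radiance` at every pixel (per color channel, each with its own g) and exponentiate the result to obtain the radiance map.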

When acquiring the images, make sure that you vary only the shutter speed and not the aperture (switch the camera to fully manual mode). Also, experiment with some simple tone-mapping approaches, such as global scaling, taking the first 0…255 values, and the global operator presented in class, L/(1+L). Which one works best for you?
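The three tone-mapping options can be sketched as below, operating on a flat list of linear radiance values (exponentiate the ln E map first). Reading "taking the first 0…255 values" as clamping after an optional scale factor is an assumption, and L/(1+L) is applied directly to each value for simplicity; all function names are hypothetical.

```python
def tonemap_scale(radiance):
    """Global scaling: map [0, max] linearly onto [0, 255]."""
    peak = max(radiance)
    return [255.0 * r / peak for r in radiance]


def tonemap_clip(radiance, scale=1.0):
    """Keep the 0..255 range: scale, then clamp everything outside it."""
    return [min(max(r * scale, 0.0), 255.0) for r in radiance]


def tonemap_global(radiance):
    """Global operator L / (1 + L), which compresses highlights, scaled to [0, 255]."""
    return [255.0 * r / (1.0 + r) for r in radiance]
```

Global scaling keeps ratios but lets a few bright pixels darken everything else, clipping preserves the dark range but saturates highlights, and L/(1+L) compresses large values smoothly toward white.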

FLASH – NO FLASH PHOTOGRAPHY

Implement a simple version of the flash/no-flash approach discussed in class. Don’t worry about handling shadows. Just see if you can get the whole high-detail, low-detail machinery working using the bilateral filter. Read the two papers and decide which parts you think are important and which could be dropped. You are largely on your own here: your homework will be judged on how good your results look.
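The high-detail/low-detail machinery can be sketched in plain Python on small grayscale images: the no-flash image is smoothed with a joint (cross) bilateral filter that takes its edges from the flash image, then multiplied by the flash image's detail layer. This is not either paper's exact method; it ignores shadows and specularities, pixel values are assumed to lie in [0, 1], and the parameter defaults, including the `eps` stabilizer, are assumed values to tune. The function names are hypothetical.

```python
import math


def joint_bilateral(img, guide, sigma_s, sigma_r, radius):
    """Bilateral filter of img whose range weights come from guide.

    img, guide: 2D lists (rows) of floats with the same shape. Passing
    guide=img gives the ordinary bilateral filter.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        ws = math.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
                        dr = guide[yy][xx] - guide[y][x]
                        wr = math.exp(-(dr * dr) / (2 * sigma_r ** 2))
                        num += ws * wr * img[yy][xx]
                        den += ws * wr
            out[y][x] = num / den  # den > 0: the center pixel has weight 1
    return out


def flash_no_flash(ambient, flash, sigma_s=2.0, sigma_r=0.1, radius=3, eps=0.02):
    """Denoise the no-flash image and transfer detail from the flash image.

    1. Low-detail base: joint bilateral filter of the ambient (no-flash)
       image, with edges taken from the less noisy flash image.
    2. High-detail layer: flash / bilateral(flash), near 1 except at fine detail.
    3. Result = base * detail.
    """
    base = joint_bilateral(ambient, flash, sigma_s, sigma_r, radius)
    flash_base = joint_bilateral(flash, flash, sigma_s, sigma_r, radius)
    return [[b * (f + eps) / (fb + eps) for b, f, fb in zip(br, fr, fbr)]
            for br, fr, fbr in zip(base, flash, flash_base)]
```

This brute-force filter costs time quadratic in the window radius for every pixel, so it is only suitable for small test images; for real photographs use a faster bilateral implementation and run it per color channel.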

HAND IN

To get full credit, you will need to implement one of the algorithms above. As usual, create a web page describing what you did and showing your results. Describe some of the non-trivial steps in your solution (e.g., the matrix whose least-squares solution you computed in the Matting project).

You will also need to hand in a proposal for your final project of the semester. This could be an improvement on one of the projects we did in class, or something completely new. We will discuss this more in class. The proposal should go on a separate web page (in the “final” directory).

Start early and good luck!
