Automatic Panorama Generation

Miklos Bergou (mbergou@andrew.cmu.edu) 15-463 Final Project

In this project, I chose to extend Project 2 by making the panorama generation fully automatic and by improving the blending scheme. In order to align the images automatically, feature points had to be detected in the input images and matched between them. The following procedure was used:

Step 1: Feature Point Extraction

For each input image, the following images were computed: Ix, Iy, Ix*Iy, Ix^2, and Iy^2, which represent the derivatives in both directions across the image and their various products. These were then blurred using a Gaussian filter to reduce noise. For each pixel, the following matrix was then computed:

$$\begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}$$

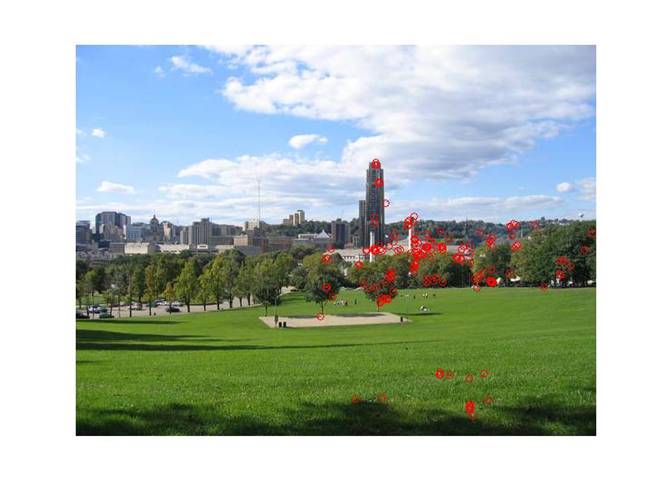

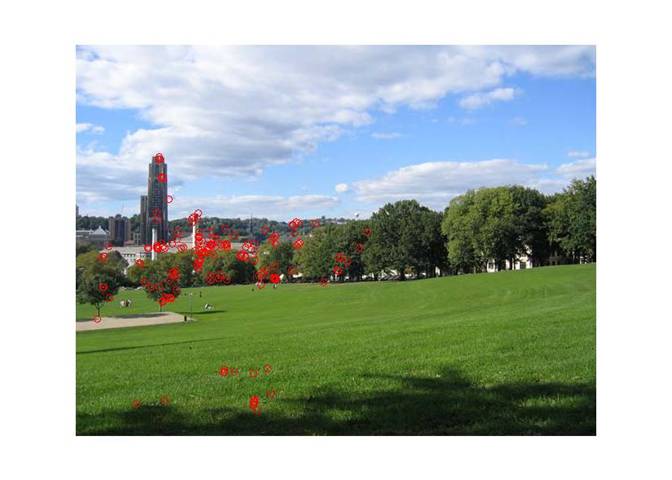

The eigenvalues of this matrix were then calculated and compared. If both eigenvalues were large and the ratio between them was close to 1, then the pixel was marked as a feature point. Generally, for an input image of dimensions 640x480, about 3,000 to 12,000 feature points would be found, depending on what the scene looked like. Here are a few examples of the feature points that were found.
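The eigenvalue test described above can be sketched in a few lines of NumPy. This is only an illustration and not the original Matlab code: the function names, the blur width `sigma`, and the thresholds `min_eig` and `max_ratio` are all assumptions chosen for the example, since the write-up does not give the values that were actually used.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D Gaussian kernel, normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur with edge padding (output has the input's shape)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def structure_eigenvalues(img, sigma=1.5):
    """Per-pixel eigenvalues (larger, smaller) of [[Ix^2, IxIy], [IxIy, Iy^2]]."""
    Iy, Ix = np.gradient(img.astype(float))   # derivatives along rows, columns
    Sxx = blur(Ix * Ix, sigma)
    Syy = blur(Iy * Iy, sigma)
    Sxy = blur(Ix * Iy, sigma)
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = 0.5 * (Sxx + Syy)
    root = np.sqrt(0.25 * (Sxx - Syy) ** 2 + Sxy ** 2)
    return half_trace + root, half_trace - root

def feature_mask(img, min_eig=0.01, max_ratio=4.0):
    """True where both eigenvalues are large and their ratio is close to 1.

    min_eig and max_ratio are hypothetical thresholds for this sketch.
    """
    lam_max, lam_min = structure_eigenvalues(img)
    return (lam_min > min_eig) & (lam_max <= max_ratio * lam_min)
```

On a synthetic image of a bright square on a dark background, this marks the corners of the square but not the middle of its edges (where one eigenvalue is near zero) or the flat interior (where both are).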

Step 2: Feature Point Matching

Once these feature points were identified for each image, they had to be matched against feature points in the other images to see how the images lined up next to each other. To do this, a simple brute-force comparison of each feature point against every feature point in the adjacent images was first done to find likely matches. This step took a very long time, since the search is O(n^2) in the number of feature points and Matlab does not do well with loops. It also produced many false matches, since the patches around different features often still looked similar.

In order to eliminate these false matches, RANSAC (RANdom SAmple Consensus) filtration was used. This technique repeatedly takes random subsets of the matching points (I used 5 points per image pair) and computes a homography between the images based on those 5 points. It then checks how many of the other matching points line up under that homography. The assumption is that if most of the matching points are actually valid, then they will tend to line up more often than the false matches. Thus, I just looked at which points lined up most often and took those to be the true matches.

This generally did well, except in some scenes with a lot of repetition, such as some architecture, in which many of the feature points looked identical to other ones. Here are examples of matching feature points for some image pairs. In general, between 100 and 200 matching points were found.
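The two stages above can be sketched roughly as follows in NumPy. Several things here are assumptions rather than details from the project: patches are compared by sum of squared differences, the homography is fit with a plain least-squares DLT, and the RANSAC loop keeps the single largest set of agreeing matches (the common textbook variant) instead of counting how often each point lines up. All function names, the pixel tolerance `tol`, and the iteration count are hypothetical.

```python
import numpy as np

def brute_force_match(desc_a, desc_b):
    """For each row of desc_a (N, D), index of the closest row of desc_b (M, D).

    Uses the sum of squared differences; this is the O(n^2) comparison.
    """
    d = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def fit_homography(src, dst):
    """Least-squares homography mapping src -> dst, both (N, 2) with N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through the 3x3 homography H."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_inliers(src, dst, sample_size=5, iters=500, tol=2.0, seed=0):
    """Boolean mask of candidate matches consistent with the best homography."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=sample_size, replace=False)
        H = fit_homography(src[idx], dst[idx])
        # Degenerate samples can produce inf/NaN; those compare as non-inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    return best
```

Here `src[i]` and `dst[i]` are the pixel coordinates of the i-th candidate match in the two images. The final homography used for alignment could then be refit from all matches in the returned mask with `fit_homography(src[mask], dst[mask])`.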

Step 3: Blending

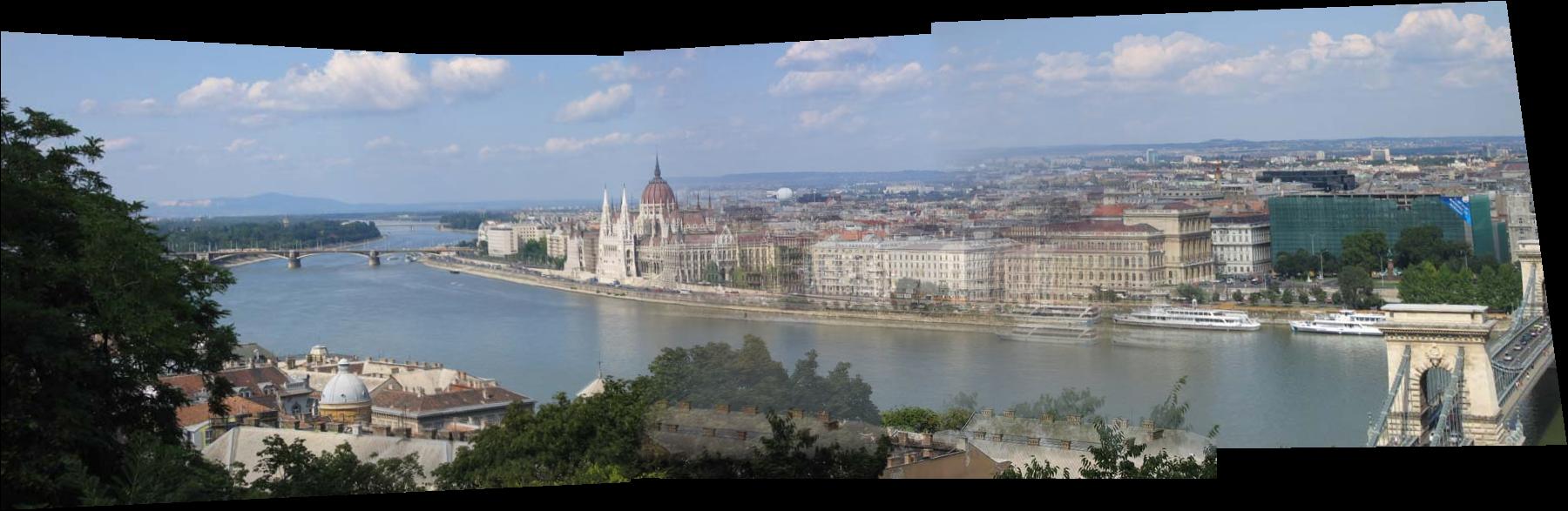

Once all of the matches had been found, the images could be lined up using homographies based on all of the matching feature points for each image pair. In order to hide the seams between the images, they had to be blended. I implemented a two-level blending scheme, which used a Gaussian layer and a Laplacian layer. When two images had to be blended together, a Gaussian-blurred image was computed for each, and the Laplacian for each was simply taken as the original minus the blurred version. The Gaussian layers were blended using linear alpha values, taken as the distance from each pixel to the nearest invalid pixel in its image. The Laplacian layers were blended by simply picking the pixel from the image with the higher alpha value. Once both of these were done, the final composite was simply the sum of the blended Gaussian layer and the blended Laplacian layer. The results of the whole process are shown below for a few different panoramas.
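As a rough illustration of the two-level scheme described above, the following NumPy sketch blends two already-aligned grayscale images. It assumes the alpha maps (distance to the nearest invalid pixel) have been computed beforehand and are passed in as arrays; the function names and the blur width `sigma` are hypothetical, and the original Matlab implementation may differ in its details.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D Gaussian kernel, normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur with edge padding (output has the input's shape)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def two_band_blend(img_a, img_b, alpha_a, alpha_b, sigma=2.0):
    """Blend two aligned float images of equal shape.

    alpha_a / alpha_b are assumed to be precomputed, non-negative weights
    that are zero wherever the corresponding image has no valid pixel.
    """
    # Gaussian (low-frequency) layer and Laplacian (original minus blurred) layer.
    low_a, low_b = blur(img_a, sigma), blur(img_b, sigma)
    high_a, high_b = img_a - low_a, img_b - low_b

    # Low frequencies: weighted average using the normalized alpha values.
    total = alpha_a + alpha_b
    safe = np.where(total > 0, total, 1.0)   # avoid dividing by zero
    low = (alpha_a * low_a + alpha_b * low_b) / safe

    # High frequencies: take the pixel from the image with the larger alpha.
    high = np.where(alpha_a >= alpha_b, high_a, high_b)

    # Composite is the sum of both layers; leave uncovered pixels at zero.
    return np.where(total > 0, low + high, 0.0)
```

Averaging only the low frequencies hides gradual brightness differences across the seam, while picking the high frequencies from a single image avoids the ghosting that averaging fine detail would cause.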

The algorithm did well on these input images since the feature points could be matched well. The panorama shown below, however, is not very good on the right side because many points were accepted as matches that were not actually matches. This is due to the fact that the scene has a lot of repetition in the buildings, such as the windows, so sometimes the wrong windows would be matched together, causing the homography to be incorrect. The filtration method used was not able to detect these false matches since they happened too frequently. Perhaps if a bigger patch around each feature point were considered when looking for matches, this scene would have looked better.