Abe Wong (arwong.spamalope@andrew.cmu.edu)

The goal of this project was to improve my Project 4 Flash - No Flash Photography code. Intrinsic relighting interests me because it seems to have the most practical use outside this course. My work was limited by the difficulty of generalizing the algorithms in Eisemann & Durand 2004 and by time constraints.

I attempted to do several things:

Because setting up a tripod can be a pain, I wanted to implement automatic registration to align a flash and a no-flash photograph of roughly the same scene.

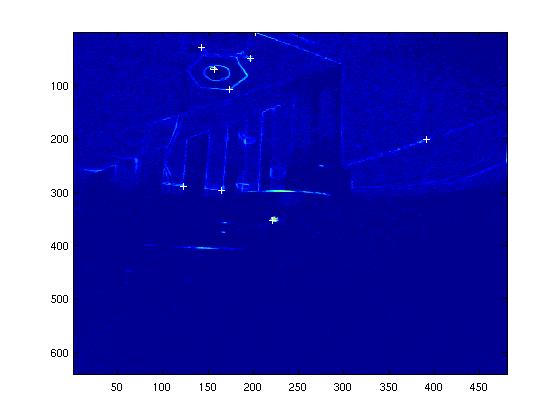

Because the lighting conditions of the two images differ so much, I performed all operations on the image derivatives. Features were detected with a Harris corner detector on the no-flash image. I used the no-flash image because it should have fewer features than the flash image; detecting on the flash image would mean trying to match many features that do not exist in the no-flash image. Matching was performed by normalized correlation over a small window of the flash image. The correlation response was further weighted by a Gaussian, which is somewhat visible in the feature image below.
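The matching step can be sketched in a few lines of Python. This is a minimal illustration, not the original code: the function names, the window sizes, and the reading of the Gaussian weighting as a penalty on large displacements are all assumptions, and Harris detection itself is not shown.

```python
import math

def ncc(a, b):
    """Normalized correlation of two equal-sized patches (lists of rows)."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    da = [v - ma for v in fa]
    db = [v - mb for v in fb]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom > 0 else 0.0

def patch(img, r, c, half):
    """Square window centred on (r, c); the caller keeps it inside the image."""
    return [row[c - half:c + half + 1] for row in img[r - half:r + half + 1]]

def match_feature(noflash_grad, flash_grad, r, c, half=1, search=2, sigma=2.0):
    """Return ((dr, dc), score) for the best match of the feature at (r, c).

    Assumption: the Gaussian weight favours small displacements.
    """
    h, w = len(flash_grad), len(flash_grad[0])
    template = patch(noflash_grad, r, c, half)
    best_score, best_offset = -float("inf"), (0, 0)
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            # Skip candidate windows that fall outside the flash image.
            if rr - half < 0 or cc - half < 0 or rr + half >= h or cc + half >= w:
                continue
            weight = math.exp(-(dr * dr + dc * dc) / (2 * sigma * sigma))
            score = weight * ncc(template, patch(flash_grad, rr, cc, half))
            if score > best_score:
                best_score, best_offset = score, (dr, dc)
    return best_offset, best_score
```

Because the correlation subtracts each patch's mean and divides by its energy, a uniform brightness gain or offset between the two patches does not change the score, which is the property that makes it usable across flash and no-flash exposures.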

No flash features

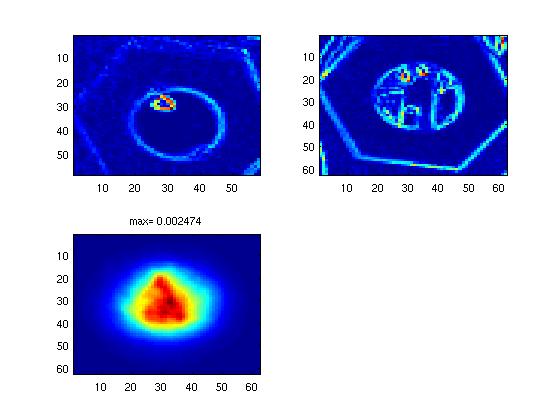

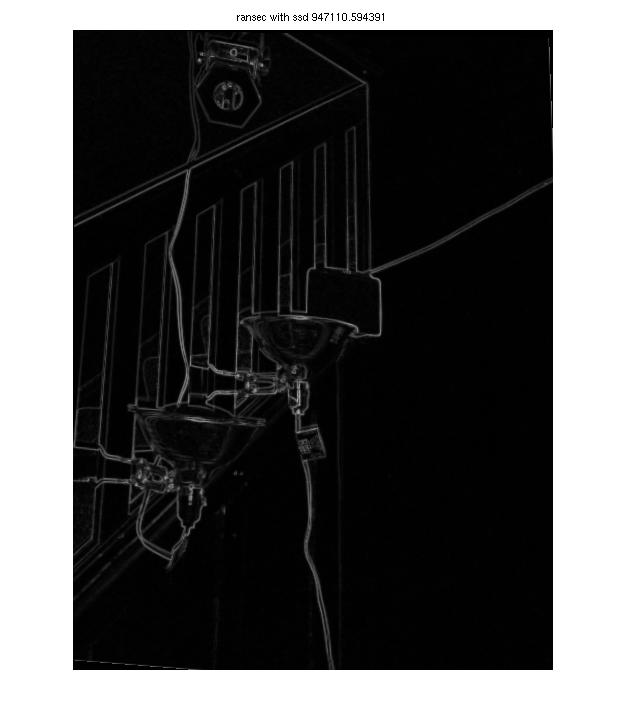

I attempted to filter out bad points by selecting a few matches at random, transforming the flash gradient with the resulting homography, and then computing a simple SSD against the no-flash gradient. While this was not terrible at the task, it still produced a fair number of errors and, not infrequently, a completely wrong result. The most obvious flaw is that the gradient images are essentially edge maps, with no substantial features between the edges. I was, however, limited by the substantial difference in pixel values between the flash and no-flash images. I spent some time trying to improve this code, but spent the bulk of my time on shadows and used a tripod for the other images here.
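A simplified Python sketch of this sample-and-score idea follows. To stay short it swaps the homography for an integer translation, which is a deliberate simplification and not what the project did; the function names and parameters are hypothetical, and the score is a mean rather than a raw sum so that small overlaps are not favored.

```python
import random

def ssd_after_shift(flash_grad, noflash_grad, dr, dc):
    """Mean squared difference over the overlap for a candidate shift (dr, dc)."""
    h, w = len(noflash_grad), len(noflash_grad[0])
    total, count = 0.0, 0
    for r in range(h):
        for c in range(w):
            fr, fc = r + dr, c + dc
            if 0 <= fr < h and 0 <= fc < w:
                d = flash_grad[fr][fc] - noflash_grad[r][c]
                total += d * d
                count += 1
    return total / count if count else float("inf")

def pick_translation(matches, flash_grad, noflash_grad, trials=20, sample=2, seed=0):
    """Try random subsets of matches and keep the shift with the lowest score.

    matches: list of ((r, c) in no-flash, (r, c) in flash).
    Returns (score, (dr, dc)).
    """
    rng = random.Random(seed)
    best = (float("inf"), (0, 0))
    for _ in range(trials):
        subset = rng.sample(matches, sample)
        # Translation model (assumption): average displacement of the subset.
        dr = round(sum(f[0] - n[0] for n, f in subset) / sample)
        dc = round(sum(f[1] - n[1] for n, f in subset) / sample)
        score = ssd_after_shift(flash_grad, noflash_grad, dr, dc)
        if score < best[0]:
            best = (score, (dr, dc))
    return best
```

The weakness noted above applies to this sketch as well: when the inputs are edge maps, most pixels are near zero in both images, so many wrong shifts score almost as well as the right one.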

It may have been a better idea to use a real RANSAC algorithm and match points rather than image data, although I had briefly experimented with one in Project 2 with poor results. SIFT descriptors might also have worked better, although I'm not sure how they would respond to the lighting disparity.

Selection of good feature matches.

Because shadows from the flash can introduce bad color information and false details, it is desirable to identify shadows and then correct their coloring.

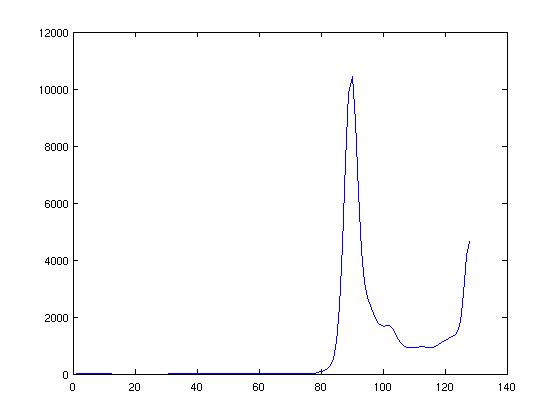

Umbra detection as described in Eisemann & Durand works reasonably well for many images. The idea is to take the difference between the flash and no-flash image intensities and find a threshold below which all pixels are marked as shadow. A histogram is created of the lower difference values, and the largest local minimum is selected.

This alone selects many pixels which are not technically shadows, such as the dark sky and background, but these pixels are sufficiently dark not to matter, especially with certain minimum thresholds for color correction.

In some cases, however, entire objects are selected as shadow, which leads to poor results when corrected (see below). I tried several methods of eliminating this problem at the shadow detection phase, such as different intensity calculations and masks based on the saturation channel that tried to filter out medium-lightness, high-saturation pixels. Neither worked particularly well.

I spent some time attempting to figure out the best way to compute the intensity channel, primarily focusing on MATLAB's rgb2gray(), taking the luminosity channel out of the image in HSV space, and a ratio-based method discussed briefly in Eisemann & Durand. None produced superior results across the board; for the most part, the best method has to be chosen per image.
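To make the difference between the first two options concrete, here is a small Python sketch. It assumes that the HSV "luminosity channel" means the value (V) channel, and it uses the weighted-sum coefficients that MATLAB documents for rgb2gray(); the ratio-based method is left out because its details are not given here.

```python
import colorsys

def gray_weighted(r, g, b):
    """Weighted sum using the coefficients documented for MATLAB's rgb2gray()."""
    return 0.2989 * r + 0.5870 * g + 0.1140 * b

def hsv_value(r, g, b):
    """Value channel of HSV, which equals max(r, g, b) for inputs in [0, 1]."""
    return colorsys.rgb_to_hsv(r, g, b)[2]

# A saturated red pixel is dark under the weighted sum but fully bright in HSV.
red = (1.0, 0.0, 0.0)
print(gray_weighted(*red), hsv_value(*red))
```

Saturated colors are exactly where the two measures disagree most, which may be part of why no single choice worked for every image.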

Threshold on intensity difference from intensity difference.

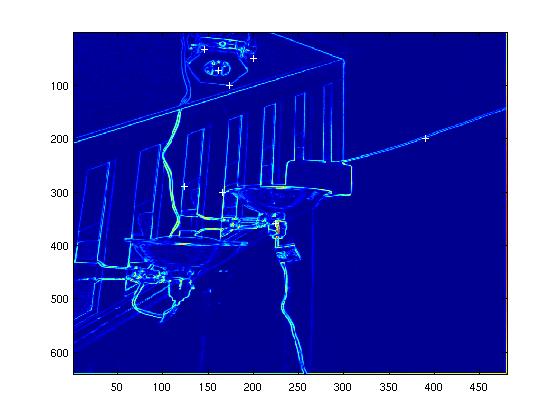

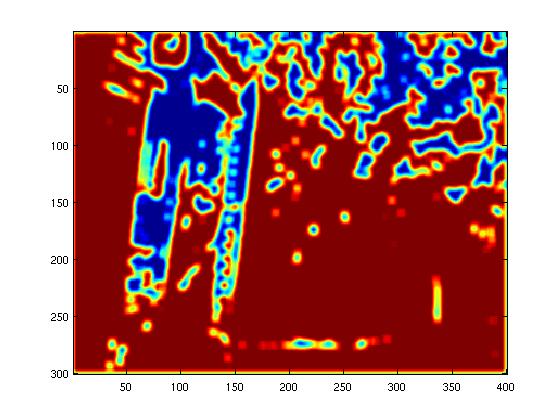

Penumbras are detected as pixels connected to umbra pixels which also have a strong gradient. The assumption here is that, because penumbras are soft, they have a gradient which is not present in the no-flash photo. A hard threshold on the gradient difference was combined with a dilated umbra to get the penumbras. This works moderately well but is limited by the requirement that penumbras be connected to an umbra.
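The combination of a dilated umbra with a gradient-difference threshold can be sketched as follows. This is an illustrative Python version with a square structuring element; the function names, the dilation radius, and the threshold value are assumptions.

```python
def dilate(mask, radius=1):
    """Binary dilation with a (2 * radius + 1) square structuring element."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                for rr in range(max(0, r - radius), min(h, r + radius + 1)):
                    for cc in range(max(0, c - radius), min(w, c + radius + 1)):
                        out[rr][cc] = True
    return out

def penumbra_mask(umbra, flash_grad, noflash_grad, grad_thresh, radius=1):
    """Pixels near the umbra whose flash gradient exceeds the no-flash gradient."""
    near = dilate(umbra, radius)
    h, w = len(umbra), len(umbra[0])
    return [[near[r][c] and not umbra[r][c]
             and (flash_grad[r][c] - noflash_grad[r][c]) > grad_thresh
             for c in range(w)] for r in range(h)]
```

The dilation radius controls how far from an umbra a penumbra pixel may lie, which is the connectedness limitation described above.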

Dilated umbra

Of course, there exist shadows which are pure penumbra with no connectedness. For these Eisemann & Durand apply a box filter over the gradient difference and pick large values, with the rationale that these pixels have a large number of neighbors which are also potentially soft shadows.

I used a Gaussian filter rather than a box filter mostly out of preference; I found nearly no difference. The results were fairly poor, however. While some significant shadows were detected that did not appear using the above methods, the detection was far from perfect, and the algorithm also produced huge shadow artifacts due to lighting, noise, and possibly other causes.
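A small Python sketch of the filter-then-threshold step is shown below, using a separable Gaussian with replicated borders. The kernel size, sigma, and threshold are illustrative assumptions rather than the values used for the images here.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    s = sum(k)
    return [v / s for v in k]

def blur_rows(img, kernel):
    """Filter each row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    w = len(img[0])
    return [[sum(kernel[j + radius] * row[min(max(c + j, 0), w - 1)]
                 for j in range(-radius, radius + 1)) for c in range(w)]
            for row in img]

def transpose(img):
    return [list(col) for col in zip(*img)]

def soft_shadow_mask(grad_diff, sigma=1.0, radius=2, thresh=0.5):
    """Keep pixels whose neighbourhood also has a large gradient difference."""
    k = gaussian_kernel(sigma, radius)
    # Separable filtering: rows first, then columns via a transpose.
    blurred = transpose(blur_rows(transpose(blur_rows(grad_diff, k)), k))
    return [[v > thresh for v in row] for row in blurred]
```

With these settings an isolated bright pixel is filtered out while the center of a dense 3x3 patch survives, which matches the rationale that soft shadows have many similar neighbors. Noise or lighting changes that also form dense patches would pass the same test, consistent with the artifacts described above.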

Filter over gradient

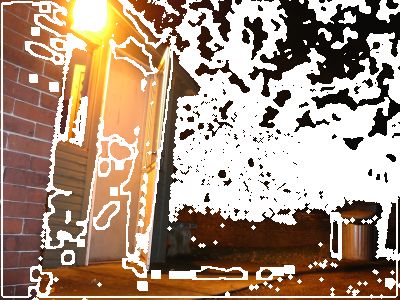

Eisemann & Durand use a method of color selection similar to the bilateral filter: it takes an average of non-shadow flash-photo pixels, weighted by their similarity in the no-flash image and their pixel distance to the pixel being corrected. This worked moderately well in some cases but failed in many, particularly where the bilateral filter itself is prone to failure: over fine detail, which it blurs by nature, and over softer edges. The sample image below originally performed poorly; it picked up a strong glow around the edges of the building. While there is some glow in the no-flash image due to various lighting effects, the problem was that too few non-shadow pixels were nearby to produce a good color, as in the sky/tree area of the sample image. I corrected this issue by adding to the color average a spatially small, lightly weighted portion of shadow colors.
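The weighted average, including the lightly weighted shadow pixels, might look like the following Python sketch. The Gaussian falloffs, all parameter values, and the function name are assumptions chosen for illustration; only the overall weighting scheme comes from the description above.

```python
import math

def corrected_color(flash, noflash, shadow, r, c, radius=2, sigma_s=2.0,
                    sigma_i=0.1, shadow_weight=0.05, shadow_radius=1):
    """Weighted average of flash colors around the shadow pixel (r, c).

    flash: rows of (R, G, B) tuples; noflash: rows of intensities;
    shadow: rows of booleans marking detected shadow pixels.
    """
    h, w = len(flash), len(flash[0])
    acc, total = [0.0, 0.0, 0.0], 0.0
    for rr in range(max(0, r - radius), min(h, r + radius + 1)):
        for cc in range(max(0, c - radius), min(w, c + radius + 1)):
            d2 = (rr - r) ** 2 + (cc - c) ** 2
            di = noflash[rr][cc] - noflash[r][c]
            # Spatial closeness times similarity in the no-flash image.
            wgt = (math.exp(-d2 / (2 * sigma_s ** 2))
                   * math.exp(-di * di / (2 * sigma_i ** 2)))
            if shadow[rr][cc]:
                # Shadow pixels count only within a small window, and only lightly.
                if max(abs(rr - r), abs(cc - c)) > shadow_radius:
                    continue
                wgt *= shadow_weight
            acc = [a + wgt * v for a, v in zip(acc, flash[rr][cc])]
            total += wgt
    if total == 0.0:
        return tuple(flash[r][c])  # nothing usable nearby: leave the pixel as is
    return tuple(a / total for a in acc)
```

When plenty of similar non-shadow pixels are nearby, the shadow term barely changes the result; it only matters in regions where the non-shadow weights sum to nearly nothing.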

Window of flash image

Window of no-flash image

Window of shadow mask

Weight map for non-shadowed pixels

Weight map for shadowed pixels

Results are heavily skewed by problems with the automatic shadow detection, which introduce rather horrible artifacts in the detail layer of the image that go on to appear in the composited flash - no flash image.

Note the images below, where a dark red pipe is badly blurred out in the shadow-corrected image, and wall color has bled into the dark air duct near the top of the image. This is the fault of shadow detection.

It seems likely that texture synthesis (e.g., Efros et al.) would have performed much better at correcting these pixels while preserving detail and potentially even edges.

Unfortunately, I found little of my new work effective enough to be used on photos I had taken. I still composited them, and here are some of the better-looking ones. Note how these images have a slightly unnatural glow, and sometimes blur, which is the natural result of the bilateral filter. If I were to expand this work, this is the first thing I would do, because it seems to show the most promise.