Learning Silhouette Features for Control of Human Motion

Liu Ren, Gregory Shakhnarovich, Jessica K. Hodgins, Hanspeter Pfister, Paul Viola

ACM Transactions on Graphics (2005)

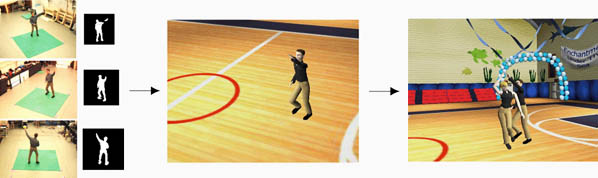

We present a vision-based performance interface for controlling animated human characters. The system combines information about the user's motion contained in silhouettes from several viewpoints with domain knowledge contained in a motion capture database to interactively produce a high-quality animation. Such an interactive system will be useful for authoring, teleconferencing, or as a control interface for a character in a game. In our system, the user performs in front of three video cameras; the resulting silhouettes are used to estimate his or her orientation and body configuration based on a set of discriminative local features. These features are selected by a machine learning algorithm during a preprocessing step. Sequences of motions that approximate the user's actions are extracted from the motion database and scaled in time to match the speed of the user's motion. We use swing dancing, an example of complex human motion, to demonstrate the effectiveness of our approach and compare the results obtained with discriminative local features to those obtained with global features (Hu moments) and to ground-truth measurements from a motion capture system.
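The global-feature baseline named in the abstract is Hu moments, seven classic invariants of an image region. The sketch below is a minimal illustration, not the authors' implementation: it assumes each camera's silhouette arrives as a binary mask given as rows of 0/1 values, and the function name `hu_moments` is our own. It computes scale-normalized central moments and combines them into the seven standard invariants.

```python
import math
from typing import List, Sequence


def hu_moments(mask: Sequence[Sequence[int]]) -> List[float]:
    """Seven Hu moment invariants of a binary silhouette (hypothetical helper)."""
    # Foreground pixel coordinates, with x = column index and y = row index.
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = float(len(pts))  # zeroth moment = silhouette area for a binary mask
    if m00 == 0:
        raise ValueError("empty silhouette")
    xbar = sum(x for x, _ in pts) / m00
    ybar = sum(y for _, y in pts) / m00

    def eta(p: int, q: int) -> float:
        # Central moment mu_pq, normalized for scale by mu_00^(1 + (p + q) / 2).
        mu = sum((x - xbar) ** p * (y - ybar) ** q for x, y in pts)
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03          # recurring sums
    c, d = n30 - 3 * n12, 3 * n21 - n03  # recurring differences

    h1 = n20 + n02
    h2 = (n20 - n02) ** 2 + 4 * n11 ** 2
    h3 = c ** 2 + d ** 2
    h4 = a ** 2 + b ** 2
    h5 = c * a * (a ** 2 - 3 * b ** 2) + d * b * (3 * a ** 2 - b ** 2)
    h6 = (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b
    h7 = d * a * (a ** 2 - 3 * b ** 2) - c * b * (3 * a ** 2 - b ** 2)
    return [h1, h2, h3, h4, h5, h6, h7]
```

Because these invariants are insensitive to translation, in-plane rotation, and (up to discretization) scale, each value summarizes the whole silhouette rather than any particular body part. One hypothetical way to form a multi-view descriptor is to concatenate the seven values from each of the three cameras into a 21-element vector.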

Liu Ren, Gregory Shakhnarovich, Jessica K. Hodgins, Hanspeter Pfister, and Paul Viola (2005). Learning Silhouette Features for Control of Human Motion. ACM Transactions on Graphics, 24(4).

@article{Ren:2005:LSF,
  author    = {Liu Ren and Gregory Shakhnarovich and Jessica K. Hodgins and Hanspeter Pfister and Paul Viola},
  title     = {Learning Silhouette Features for Control of Human Motion},
  journal   = {ACM Transactions on Graphics},
  year      = {2005},
  month     = oct,
  volume    = {24},
  number    = {4},
  publisher = {ACM Press},
}