3D reconstruction with fast dipole sums

| Hanyu Chen | Bailey Miller | Ioannis Gkioulekas |

ACM Trans. Graph. (2024)

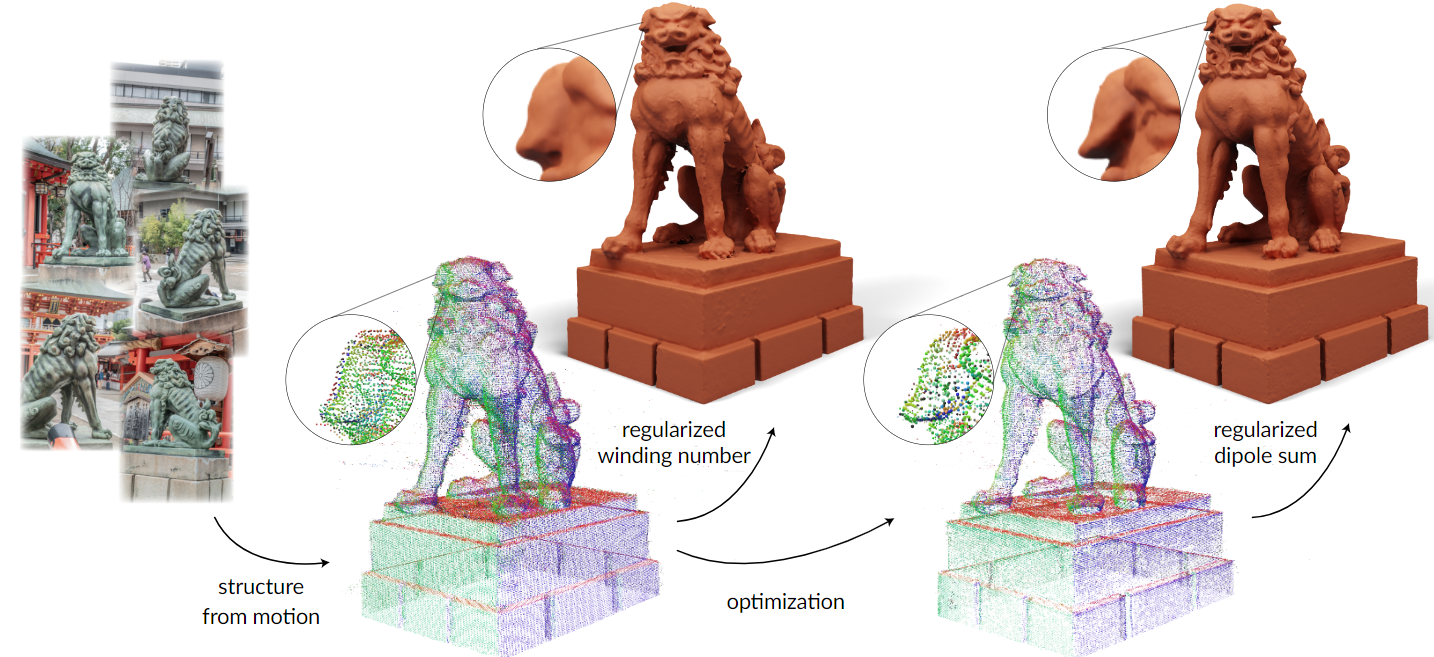

We introduce a method for high-quality 3D reconstruction from multi-view images. Our method uses a new point-based representation, the regularized dipole sum, which generalizes the winding number to allow for interpolation of per-point attributes in point clouds with noisy or outlier points. Using regularized dipole sums, we represent implicit geometry and radiance fields as per-point attributes of a dense point cloud, which we initialize from structure from motion. We additionally derive Barnes-Hut fast summation schemes for accelerated forward and adjoint dipole sum queries. These queries facilitate the use of ray tracing to efficiently and differentiably render images with our point-based representations, and thus update their point attributes to optimize scene geometry and appearance. We evaluate our method in inverse rendering applications against state-of-the-art alternatives, based on ray tracing of neural representations or rasterization of Gaussian point-based representations. Our method significantly improves 3D reconstruction quality and robustness at equal runtimes, while also supporting more general rendering methods such as shadow rays for direct illumination.
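As a rough illustration of the quantity the regularized dipole sum generalizes, the sketch below evaluates the standard winding number of an oriented point cloud as a sum of dipole contributions, one per point. It is not the paper's implementation: the function names `dipole_sum` and `fibonacci_sphere`, the per-point `areas` weights, and the simple `eps` softening of the kernel are all assumptions made for illustration, and the paper's regularized kernel may differ. The sum is also evaluated by brute force in O(N) per query, whereas the paper derives Barnes-Hut schemes to accelerate such queries.

```python
import numpy as np


def dipole_sum(query, points, normals, areas, eps=0.0):
    """Winding-number-style dipole sum at a single query point.

    eps > 0 softens the kernel near the points. This particular softening
    is an illustrative assumption, not the kernel used in the paper.
    """
    d = points - query                        # (N, 3) vectors from query to each point
    r2 = np.sum(d * d, axis=1) + eps * eps    # (softened) squared distances
    num = np.sum(d * normals, axis=1)         # (p_i - q) . n_i for each point
    return float(np.sum(areas * num / (4.0 * np.pi * r2 ** 1.5)))


def fibonacci_sphere(n):
    """Roughly uniform samples on the unit sphere (hypothetical test helper)."""
    i = np.arange(n) + 0.5
    z = 1.0 - 2.0 * i / n
    phi = np.pi * (1.0 + 5.0 ** 0.5) * i
    r = np.sqrt(1.0 - z * z)
    return np.stack([r * np.cos(phi), r * np.sin(phi), z], axis=1)


# Usage: an oriented point cloud sampled from the unit sphere.
n = 2000
pts = fibonacci_sphere(n)
nrm = pts.copy()                              # outward normals of the unit sphere
area = np.full(n, 4.0 * np.pi / n)            # equal share of the sphere's area per point

inside = dipole_sum(np.zeros(3), pts, nrm, area)                 # approximately 1
outside = dipole_sum(np.array([3.0, 0.0, 0.0]), pts, nrm, area)  # approximately 0
```

With outward-facing normals, the sum is close to 1 for queries inside the surface and close to 0 outside, which is what makes it usable as an implicit inside/outside indicator for a point cloud.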

Hanyu Chen, Bailey Miller, Ioannis Gkioulekas (2024). 3D reconstruction with fast dipole sums. ACM Trans. Graph.

@article{Chen:2024:FDS,

author = {Hanyu Chen and Bailey Miller and Ioannis Gkioulekas},

title = {3D reconstruction with fast dipole sums},

journal = {ACM Trans. Graph.},

year = {2024},

publisher = {ACM},

address = {New York, NY, USA},

}