Ablating Concepts in Text-to-Image Diffusion Models

Nupur Kumari, Bingliang Zhang, Sheng-Yu Wang, Eli Shechtman, Richard Zhang, Jun-Yan Zhu

IEEE International Conference on Computer Vision (ICCV) (2023)

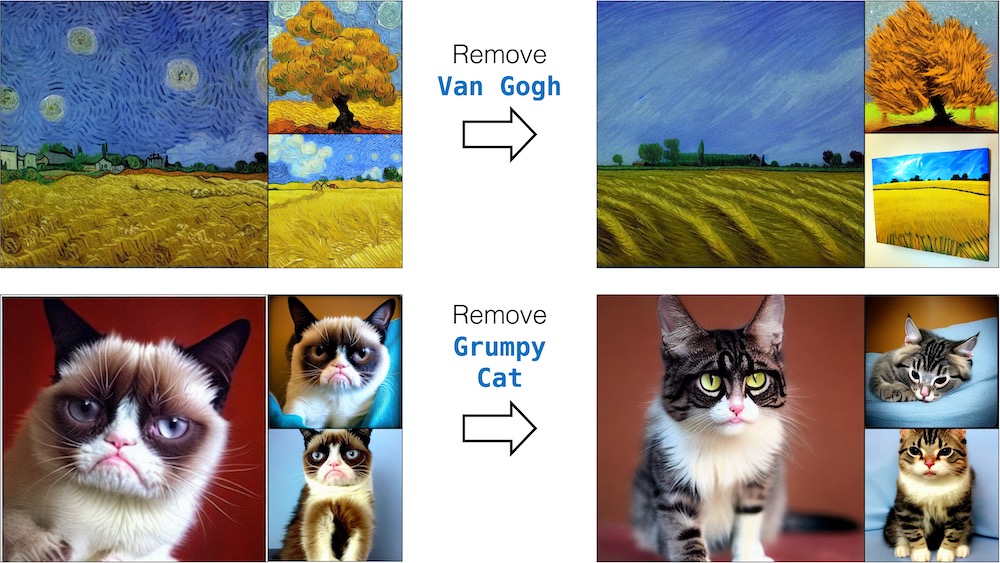

Large-scale text-to-image diffusion models can generate high-fidelity images with powerful compositional ability. However, these models are typically trained on an enormous amount of Internet data, often containing copyrighted material, licensed images, and personal photos. Furthermore, they have been found to replicate the style of various living artists or memorize exact training samples. How can we remove such copyrighted concepts or images without retraining the model from scratch? To achieve this goal, we propose an efficient method for ablating concepts in a pretrained model, i.e., preventing it from generating a target concept. Our algorithm learns to match the image distribution of a target style, instance, or text prompt that we wish to ablate to the distribution of an anchor concept. This prevents the model from generating the target concept when given the target concept's text prompt. Extensive experiments show that our method can successfully prevent the generation of the ablated concept while preserving closely related concepts in the model.
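The abstract describes ablation as matching the target concept's distribution to that of an anchor concept. Below is a minimal sketch of one way to express such an objective, under stated assumptions that are not taken from this page: the fine-tuned model's noise prediction for the target prompt is regressed onto a frozen copy's prediction for the anchor prompt, evaluated on noised samples. The linear "denoiser", the `ablate`/`ablation_step` helpers, and the prompt strings are hypothetical stand-ins for a real diffusion model and its text encoder.

```python
import random

DIM = 4  # toy latent size (hypothetical)


def predict(weights, emb, x_t):
    """Toy conditional noise predictor (hypothetical): eps_hat = W @ x_t + emb."""
    return [sum(w * x for w, x in zip(row, x_t)) + e for row, e in zip(weights, emb)]


def ablation_step(weights, embs, frozen_embs, target, anchor, x_t, lr):
    """One SGD step on ||eps(x_t, target) - eps_frozen(x_t, anchor)||^2.

    Only the target prompt's embedding is updated. The frozen prediction is
    treated as a constant, so no gradient flows into it.
    Returns the loss measured before the update.
    """
    pred = predict(weights, embs[target], x_t)
    goal = predict(weights, frozen_embs[anchor], x_t)
    grad = [2.0 * (p - g) for p, g in zip(pred, goal)]  # d(loss)/d(emb)
    embs[target] = [e - lr * g for e, g in zip(embs[target], grad)]
    return sum((p - g) ** 2 for p, g in zip(pred, goal))


def ablate(target, anchor, steps=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    weights = [[rng.gauss(0, 0.5) for _ in range(DIM)] for _ in range(DIM)]
    embs = {p: [rng.gauss(0, 1) for _ in range(DIM)] for p in (target, anchor)}
    frozen = {p: list(v) for p, v in embs.items()}  # copy of the "pretrained" model
    losses = []
    for _ in range(steps):
        # Noised sample standing in for an anchor image:
        # x_t = sqrt(a) * x0 + sqrt(1 - a) * noise.
        x0 = [rng.gauss(0, 1) for _ in range(DIM)]
        a = rng.random()
        x_t = [a ** 0.5 * x + (1 - a) ** 0.5 * rng.gauss(0, 1) for x in x0]
        losses.append(ablation_step(weights, embs, frozen, target, anchor, x_t, lr))
    return embs, frozen, losses


if __name__ == "__main__":
    embs, frozen, losses = ablate("painting in the style of <artist>", "painting")
    print(f"loss: {losses[0]:.4f} -> {losses[-1]:.2e}")
```

In this linear toy the shared `W @ x_t` term cancels in the residual, so the target prompt's embedding simply converges to the anchor's while the anchor itself is left untouched. In a real text-to-image model the residual depends on `x_t`, so many noised samples would be needed to match the two conditional distributions.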

Nupur Kumari, Bingliang Zhang, Sheng-Yu Wang, Eli Shechtman, Richard Zhang, Jun-Yan Zhu (2023). Ablating Concepts in Text-to-Image Diffusion Models. IEEE International Conference on Computer Vision (ICCV).

@inproceedings{kumari2023conceptablation,
  author = {Nupur Kumari and Bingliang Zhang and Sheng-Yu Wang and Eli Shechtman and Richard Zhang and Jun-Yan Zhu},
  title = {Ablating Concepts in Text-to-Image Diffusion Models},
  booktitle = {IEEE International Conference on Computer Vision (ICCV)},
  year = {2023},
}