Sketch Your Own GAN

Sheng-Yu Wang, David Bau, Jun-Yan Zhu

Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) (2021)

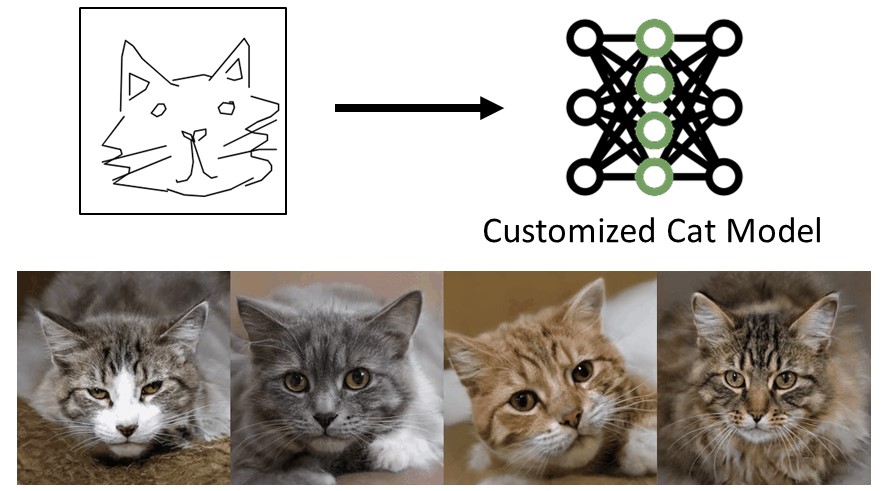

Can a user create a deep generative model by sketching a single example? Traditionally, creating a GAN model has required collecting a large-scale dataset of exemplars and specialized knowledge of deep learning. In contrast, sketching is possibly the most universally accessible way to convey a visual concept. In this work, we present a method, GAN Sketching, for rewriting GANs with one or more sketches, to make GAN training easier for novice users. In particular, we change the weights of an original GAN model according to user sketches. We encourage the model's output to match the user sketches through a cross-domain adversarial loss. Furthermore, we explore different regularization methods to preserve the original model's diversity and image quality. Experiments show that our method can mold GANs to match shapes and poses specified by sketches while maintaining realism and diversity. Finally, we demonstrate a few applications of the resulting GAN, including latent space interpolation and image editing.
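The abstract describes the training objective only at a high level. The sketch below shows one way its pieces could fit together, assuming a standard non-saturating GAN loss. A fixed image-to-sketch network F maps a generated image G(z) into the sketch domain, and a sketch discriminator D_Y scores F(G(z)) against the user sketches (the cross-domain adversarial loss). As possible regularizers, an image discriminator D_X scores G(z) against images from the original training set, and an optional L1 penalty keeps the fine-tuned weights close to the original ones. To run with the Python standard library alone, the sketch works on precomputed discriminator logits and flattened weight lists. The function names and the weights `lambda_image` and `lambda_weight` are illustrative assumptions, not the authors' code or settings.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_adv_loss(fake_logits: Sequence[float]) -> float:
    """Non-saturating generator loss: mean of -log(sigmoid(logit)) = softplus(-logit)."""
    return sum(softplus(-l) for l in fake_logits) / len(fake_logits)


def discriminator_adv_loss(real_logits: Sequence[float], fake_logits: Sequence[float]) -> float:
    """Discriminator loss: mean -log(sigmoid(real)) plus mean -log(1 - sigmoid(fake))."""
    real_term = sum(softplus(-l) for l in real_logits) / len(real_logits)
    fake_term = sum(softplus(l) for l in fake_logits) / len(fake_logits)
    return real_term + fake_term


def weight_l1(theta: Sequence[float], theta_orig: Sequence[float]) -> float:
    """L1 distance between fine-tuned and original generator weights (flattened)."""
    if len(theta) != len(theta_orig):
        raise ValueError("weight vectors must have the same length")
    return sum(abs(a - b) for a, b in zip(theta, theta_orig))


def generator_objective(
    sketch_fake_logits: Sequence[float],  # hypothetical D_Y(F(G(z))) outputs
    image_fake_logits: Sequence[float],   # hypothetical D_X(G(z)) outputs
    theta: Sequence[float],               # current generator weights
    theta_orig: Sequence[float],          # weights of the original, pretrained GAN
    lambda_image: float = 0.7,            # illustrative weight (assumption)
    lambda_weight: float = 0.0,           # set > 0 to enable the weight regularizer
) -> float:
    """Cross-domain sketch loss plus optional image-space and weight regularizers."""
    l_sketch = generator_adv_loss(sketch_fake_logits)
    l_image = generator_adv_loss(image_fake_logits)
    l_weight = weight_l1(theta, theta_orig)
    return l_sketch + lambda_image * l_image + lambda_weight * l_weight


# Toy usage with made-up logits and a three-parameter "generator".
loss = generator_objective(
    sketch_fake_logits=[0.5, -1.0],
    image_fake_logits=[2.0, 1.5],
    theta=[0.10, -0.20, 0.30],
    theta_orig=[0.10, -0.25, 0.30],
    lambda_weight=1.0,
)
print(f"generator loss: {loss:.4f}")
```

In an actual training loop these scalars would come from neural networks. The generator would be updated to minimize `generator_objective`, while D_Y and D_X would be updated with `discriminator_adv_loss` on real sketches and real images, respectively.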

Sheng-Yu Wang, David Bau, Jun-Yan Zhu (2021). Sketch Your Own GAN. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV).

@inproceedings{wang2021sketch,
  author    = {Sheng-Yu Wang and David Bau and Jun-Yan Zhu},
  title     = {Sketch Your Own GAN},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month     = {October},
  year      = {2021},
}