A perceptual control space for garment simulation

Leonid Sigal, Moshe Mahler, Spencer Diaz, Kyna McIntosh, Elizabeth Carter, Timothy Richards, Jessica Hodgins

ACM Transactions on Graphics (August 2015)

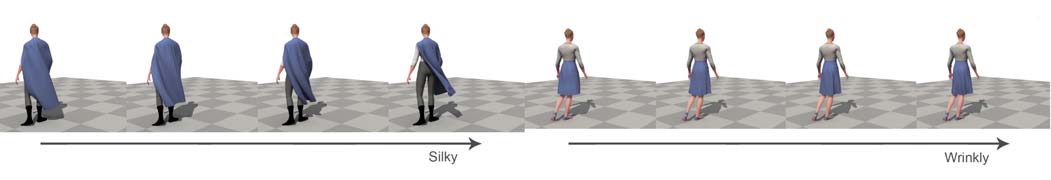

We present a perceptual control space for simulation of cloth that works with any physical simulator, treating it as a black box. The perceptual control space provides intuitive, art-directable control over the simulation behavior based on a learned mapping from common descriptors for cloth (e.g., flowiness, softness) to the parameters of the simulation. To learn the mapping, we perform a series of perceptual experiments in which the simulation parameters are varied and participants assess the values of the common terms of the cloth on a scale. A multi-dimensional sub-space regression is performed on the results to build a perceptual generative model over the simulator parameters. We evaluate the perceptual control space by demonstrating that the generative model does in fact create simulated clothing that is rated by participants as having the expected properties. We also show that this perceptual control space generalizes to garments and motions not in the original experiments.
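As a rough illustration of the idea of mapping a perceptual descriptor to simulator parameters, the sketch below fits one ordinary least-squares line per parameter against a single descriptor rating and then queries it for a target rating. This is a deliberately simplified stand-in, not the multi-dimensional sub-space regression the paper describes. The function names, the 1-7 rating scale, the two parameters (`stiffness`, `damping`), and all numbers are hypothetical toy values chosen only to make the example run.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y ~ slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_control_map(ratings, param_rows):
    """Fit one line per simulator parameter against a single descriptor rating."""
    return [fit_line(ratings, list(column)) for column in zip(*param_rows)]


def params_for(control_map, target_rating):
    """Evaluate every fitted line at the requested descriptor rating."""
    return [slope * target_rating + intercept for slope, intercept in control_map]


# Toy, made-up data (assumption): mean "flowiness" rating per clip on a 1-7 scale,
# and the (stiffness, damping) values assumed to have produced each clip.
ratings = [1.0, 2.0, 4.0, 5.0, 7.0]
param_rows = [(0.9 - 0.1 * r, 2.0 + 0.5 * r) for r in ratings]

control_map = fit_control_map(ratings, param_rows)
suggested = params_for(control_map, 6.0)  # parameters for a target rating of 6
```

Because the simulator is only ever consulted through its parameter values, a mapping of this kind stays independent of the simulator's internals, which is the black-box property the abstract emphasizes.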

Leonid Sigal, Moshe Mahler, Spencer Diaz, Kyna McIntosh, Elizabeth Carter, Timothy Richards, Jessica Hodgins (August 2015). A perceptual control space for garment simulation. ACM Transactions on Graphics, 34(4).

@article{Hodgins:2017:DOE,

author={Leonid Sigal and Moshe Mahler and Spencer Diaz and Kyna McIntosh and Elizabeth Carter and Timothy Richards and Jessica Hodgins},

title={A perceptual control space for garment simulation},

journal={ACM Transactions on Graphics},

volume={34},

number={4},

year={2015},

month={August},